In his new book, Landmarks, author Robert Macfarlane ponders the relationship of words to our natural landscape. Reviewers describe the book as a “field guide to the literature of nature”. Sadly, Macfarlane’s detailed research for the book chronicles a disturbing trend: the culling from our everyday lexicon of many words that describe our natural world, to make way for the buzzwords of progress. This substitution comes in the form of newer memes that describe our narrow, urbanized and increasingly virtual world circumscribed by technology. Macfarlane cites the Oxford Junior Dictionary (OJD) as a vivid example of the evisceration of our language of landscape. The OJD has removed words such as acorn, beech, conker, dandelion, heather, heron, kingfisher, pasture and willow. In their place we now find words like attachment, blog, broadband, bullet-point, celebrity, chatroom, cut-and-paste, MP3 player and voice-mail. Get the idea?

I’m no fundamentalist Luddite (I’m writing a blog, after all), but surely some aspects of our heritage warrant protection. We are an intrinsic part of the natural environment despite our increasing urbanization. Don’t we all crave the escape to a place where we can lounge under a drooping willow, surrounded by nothing more than the buzzing of insects and the babbling of a stream? I’d rather that than deal with the next attachment or voice-mail.

What a loss it would be for our children, and a double-edged loss at that. We, the preceding generation, continue to preside over the systematic destruction of our natural landscape. And in doing so, we remove the words as well: the words that once described what we still crave.

From the Guardian:

Eight years ago, in the coastal township of Shawbost on the Outer Hebridean island of Lewis, I was given an extraordinary document. It was entitled “Some Lewis Moorland Terms: A Peat Glossary”, and it listed Gaelic words and phrases for aspects of the tawny moorland that fills Lewis’s interior. Reading the glossary, I was amazed by the compressive elegance of its lexis, and its capacity for fine discrimination: a caochan, for instance, is “a slender moor-stream obscured by vegetation such that it is virtually hidden from sight”, while a feadan is “a small stream running from a moorland loch”, and a fèith is “a fine vein-like watercourse running through peat, often dry in the summer”. Other terms were striking for their visual poetry: rionnach maoim means “the shadows cast on the moorland by clouds moving across the sky on a bright and windy day”; èit refers to “the practice of placing quartz stones in streams so that they sparkle in moonlight and thereby attract salmon to them in the late summer and autumn”, and teine biorach is “the flame or will-o’-the-wisp that runs on top of heather when the moor burns during the summer”.

The “Peat Glossary” set my head a-whirr with wonder-words. It ran to several pages and more than 120 terms – and as that modest “Some” in its title acknowledged, it was incomplete. “There’s so much language to be added to it,” one of its compilers, Anne Campbell, told me. “It represents only three villages’ worth of words. I have a friend from South Uist who said her grandmother would add dozens to it. Every village in the upper islands would have its different phrases to contribute.” I thought of Norman MacCaig’s great Hebridean poem “By the Graveyard, Luskentyre”, where he imagines creating a dictionary out of the language of Donnie, a lobster fisherman from the Isle of Harris. It would be an impossible book, MacCaig concluded:

A volume thick as the height of the Clisham,

A volume big as the whole of Harris,

A volume beyond the wit of scholars.

The same summer I was on Lewis, a new edition of the Oxford Junior Dictionary was published. A sharp-eyed reader noticed that there had been a culling of words concerning nature. Under pressure, Oxford University Press revealed a list of the entries it no longer felt to be relevant to a modern-day childhood. The deletions included acorn, adder, ash, beech, bluebell, buttercup, catkin, conker, cowslip, cygnet, dandelion, fern, hazel, heather, heron, ivy, kingfisher, lark, mistletoe, nectar, newt, otter, pasture and willow. The words taking their places in the new edition included attachment, block-graph, blog, broadband, bullet-point, celebrity, chatroom, committee, cut-and-paste, MP3 player and voice-mail. As I had been entranced by the language preserved in the prose-poem of the “Peat Glossary”, so I was dismayed by the language that had fallen (been pushed) from the dictionary. For blackberry, read Blackberry.

I have long been fascinated by the relations of language and landscape – by the power of strong style and single words to shape our senses of place. And it has become a habit, while travelling in Britain and Ireland, to note down place words as I encounter them: terms for particular aspects of terrain, elements, light and creaturely life, or resonant place names. I’ve scribbled these words in the backs of notebooks, or jotted them down on scraps of paper. Usually, I’ve gleaned them singly from conversations, maps or books. Now and then I’ve hit buried treasure in the form of vernacular word-lists or remarkable people – troves that have held gleaming handfuls of coinages, like the Lewisian “Peat Glossary”.

Not long after returning from Lewis, and spurred on by the Oxford deletions, I resolved to put my word-collecting on a more active footing, and to build up my own glossaries of place words. It seemed to me then that although we have fabulous compendia of flora, fauna and insects (Richard Mabey’s Flora Britannica and Mark Cocker’s Birds Britannica chief among them), we lack a Terra Britannica, as it were: a gathering of terms for the land and its weathers – terms used by crofters, fishermen, farmers, sailors, scientists, miners, climbers, soldiers, shepherds, poets, walkers and unrecorded others for whom particularised ways of describing place have been vital to everyday practice and perception. It seemed, too, that it might be worth assembling some of this terrifically fine-grained vocabulary – and releasing it back into imaginative circulation, as a way to rewild our language. I wanted to answer Norman MacCaig’s entreaty in his Luskentyre poem: “Scholars, I plead with you, / Where are your dictionaries of the wind … ?”

Read the entire article here and then buy the book, which is published in March 2015.

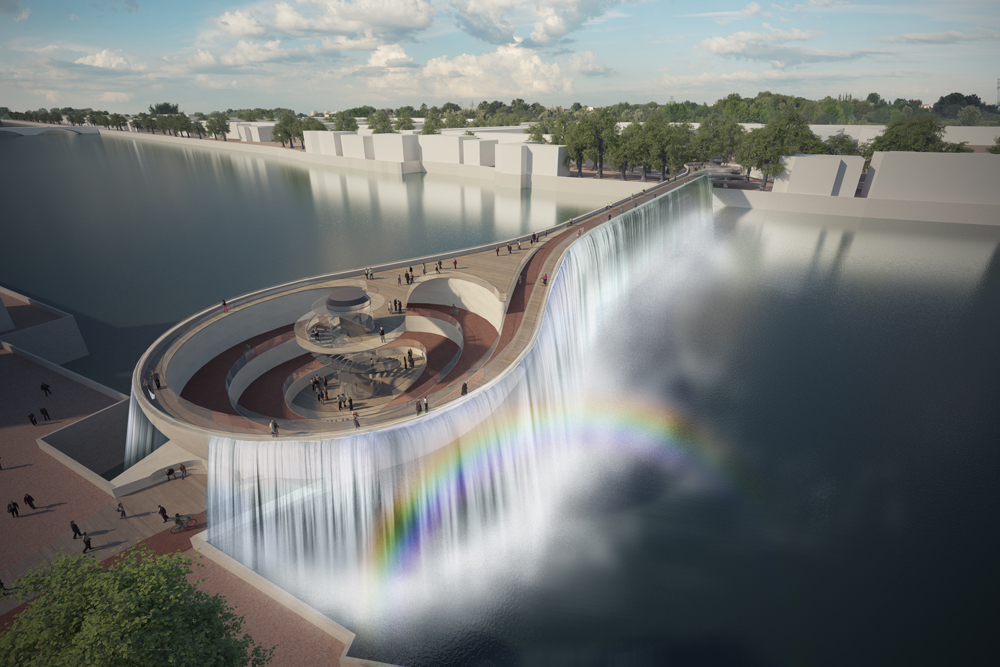

Image: Sunset over the Front Range. Courtesy of the author.