Suicide remains one of the most common causes of death in many cultures. The statistics are sobering: in 2012, more U.S. soldiers committed suicide than died in combat. Despite advances in the treatment of mental illness, little has slowed the annual rise in the number of people who take their own lives. Psychologist Matthew Nock hopes to change this through some innovative research.

From the New York Times:

For reasons that have eluded people forever, many of us seem bent on our own destruction. Recently more human beings have been dying by suicide annually than by murder and warfare combined. Despite the progress made by science, medicine and mental-health care in the 20th century — the sequencing of our genome, the advent of antidepressants, the reconsidering of asylums and lobotomies — nothing has been able to drive down the suicide rate in the general population. In the United States, it has held relatively steady since 1942. Worldwide, roughly one million people kill themselves every year. Last year, more active-duty U.S. soldiers killed themselves than died in combat; their suicide rate has been rising since 2004. Last month, the Centers for Disease Control and Prevention announced that the suicide rate among middle-aged Americans has climbed nearly 30 percent since 1999. In response to that widely reported increase, Thomas Frieden, the director of the C.D.C., appeared on PBS NewsHour and advised viewers to cultivate a social life, get treatment for mental-health problems, exercise and consume alcohol in moderation. In essence, he was saying, keep out of those demographic groups with high suicide rates, which include people with a mental illness like a mood disorder, social isolates and substance abusers, as well as elderly white males, young American Indians, residents of the Southwest, adults who suffered abuse as children and people who have guns handy.

But most individuals in every one of those groups never have suicidal thoughts — even fewer act on them — and no data exist to explain the difference between those who will and those who won’t. We also have no way of guessing when — in the next hour? in the next decade? — known risk factors might lead to an attempt. Our understanding of how suicidal thinking progresses, or how to spot and halt it, is little better now than it was two and a half centuries ago, when we first began to consider suicide a medical rather than philosophical problem and physicians prescribed, to ward it off, buckets of cold water thrown at the head.

“We’ve never gone out and observed, as an ecologist would or a biologist would go out and observe the thing you’re interested in for hours and hours and hours and then understand its basic properties and then work from that,” Matthew K. Nock, the director of Harvard University’s Laboratory for Clinical and Developmental Research, told me. “We’ve never done it.”

It was a bright December morning, and we were in his office on the 12th floor of the building that houses the school’s psychology department, a white concrete slab jutting above its neighbors like a watchtower. Below, Cambridge looked like a toy city — gabled roofs and steeples, a ribbon of road, windshields winking in the sun. Nock had just held a meeting with four members of his research team — he in his swivel chair, they on his sofa — about several of the studies they were running. His blue eyes matched his diamond-plaid sweater, and he was neatly shorn and upbeat. He seemed more like a youth soccer coach, which he is on Saturday mornings for his son’s first-grade team, than an expert in self-destruction.

At the meeting, I listened to Nock and his researchers discuss a study they were collaborating on with the Army. They were calling soldiers who had recently attempted suicide and asking them to explain what they had done and why. Nock hoped that sifting through the interview transcripts for repeated phrasings or themes might suggest predictive patterns that he could design tests to catch. A clinical psychologist, he had trained each of his researchers how to ask specific questions over the telephone. Adam Jaroszewski, an earnest 29-year-old in tortoiseshell glasses, told me that he had been nervous about calling subjects in the hospital, where they were still recovering, and probing them about why they tried to end their lives: Why that moment? Why that method? Could anything have happened to make them change their minds? Though the soldiers had volunteered to talk, Jaroszewski worried about the inflections of his voice: how could he put them at ease and sound caring and grateful for their participation without ceding his neutral scientific tone? Nock, he said, told him that what helped him find a balance between empathy and objectivity was picturing Columbo, the frumpy, polite, persistently quizzical TV detective played by Peter Falk. “Just try to be really, really curious,” Nock said.

That curiosity has made Nock, 39, one of the most original and influential suicide researchers in the world. In 2011, he received a MacArthur genius award for inventing new ways to investigate the hidden workings of a behavior that seems as impossible to untangle, empirically, as love or dreams.

Trying to study what people are thinking before they try to kill themselves is like trying to examine a shadow with a flashlight: the minute you spotlight it, it disappears. Researchers can’t ethically induce suicidal thinking in the lab and watch it develop. Uniquely human, it can’t be observed in other species. And it is impossible to interview anyone who has died by suicide. To understand it, psychologists have most often employed two frustratingly imprecise methods: they have investigated the lives of people who have killed themselves, and any notes that may have been left behind, looking for clues to what their thinking might have been, or they have asked people who have attempted suicide to describe their thought processes — though their mental states may differ from those of people whose attempts were lethal and their recollections may be incomplete or inaccurate. Such investigative methods can generate useful statistics and hypotheses about how a suicidal impulse might start and how it travels from thought to action, but that’s not the same as objective evidence about how it unfolds in real time.

Read the entire article here.

Image: 2007 suicide statistics for 15-24 year-olds. Courtesy of Crimson White, UA.

There is no doubting that technology’s grasp finds us at increasingly younger ages. No longer is it just our teens constantly mesmerized by status updates on their mobiles, and not just our “in-betweeners” addicted to “facetiming” with their BFFs. Now our technologies are fast becoming the tools of choice for our kindergarteners and pre-K kids. Some parents lament.

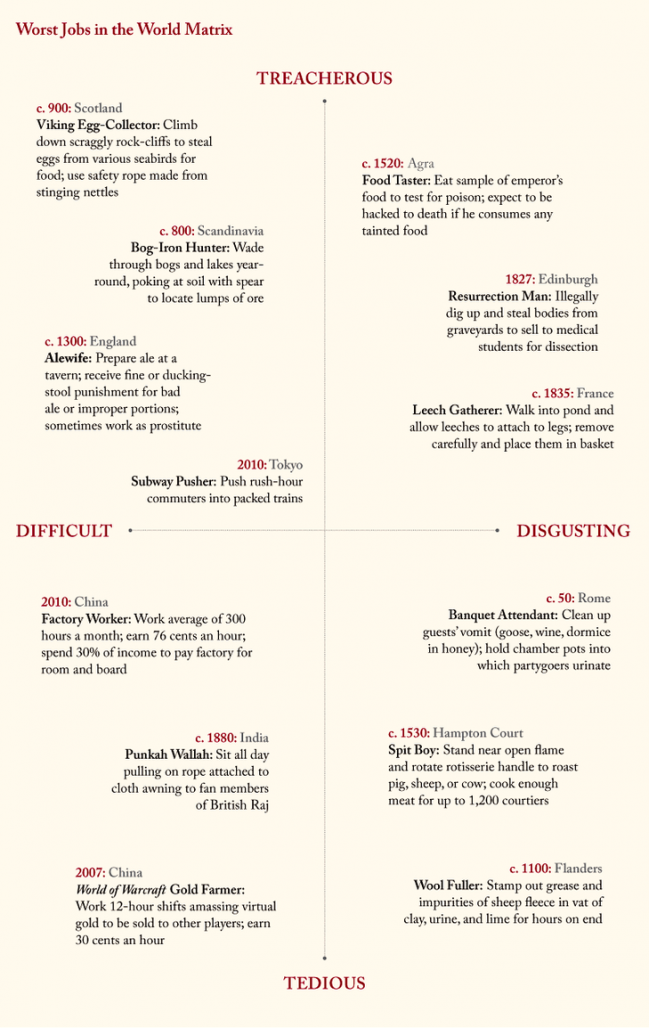

Technology is altering the lives of us all. Often it is a positive influence, offering its users tremendous benefits from time-saving to life-extension. However, the relationship of technology to our employment is more complex and usually detrimental.

The world of fiction is populated with hundreds of different genres, most of which were invented by clever marketeers anxious to ensure vampire novels (teen / horror) don’t live next to classic works (literary) on real or imagined (think Amazon) book shelves. So, it should come as no surprise to see a new category recently emerge: cli-fi.

Research shows how children as young as four years empathize with some but not others. It’s all about the group: which peer group you belong to versus the rest. Thus, the uphill struggle to instill tolerance in the next generation needs to begin very early in life.

Soon, courtesy of Amazon, Google and other retail giants, and of course lubricated by the likes of the ubiquitous UPS and FedEx trucks, you may be able to dispense with the weekly or even daily trip to the grocery store. Amazon is expanding a trial of its same-day grocery delivery service, and others are following suit in select local and regional tests.

Professor of Philosophy Gregory Currie tackles a thorny issue in his latest article. The question he seeks to answer is, “does great literature make us better?” It’s highly likely that a poll of most nations would show the majority of people believe that literature does in fact propel us in a forward direction, intellectually, morally, emotionally and culturally. It seems like a no-brainer. But where is the hard evidence?

The Cold War between the former U.S.S.R. and the United States brought us the perfect acronym for the ultimate human “game” of brinkmanship: MAD, for mutually assured destruction.

Paradoxically the law and common sense often seem to be at odds. Justice may still be blind, at least in most open democracies, but there seems to be no question as to the stupidity of much of our law.

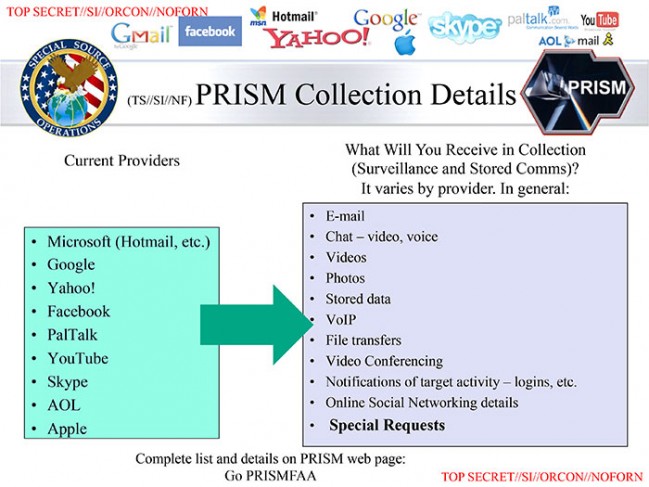

It is strange to see the reaction to a remarkable disclosure such as that by the leaker / whistleblower Edward Snowden about the National Security Agency (NSA) peering into all our daily, digital lives. One strange reaction comes from the political left: the left desires a broad and activist government, ready to protect us all, but decries the NSA’s snooping. Another odd reaction comes from the political right: the right wants government out of people’s lives, but yet embraces the idea that the NSA should be looking for virtual skeletons inside people’s digital closets.

It’s safe to suggest that most of us above a certain age (let’s say 30) wish to stay young. It is also safe to suggest, in the absence of a solution to this first wish, that many of us wish to age gracefully and happily. Yet most of us, especially in the West, age in a less dignified manner, accompanied by colorful medicines, lengthy tubes, and unpronounceable procedures. We are collectively living longer. But the quality of those extra years leaves much to be desired.

On June 9, 2013 we lost Iain Banks to cancer. He was a passionate human(ist) and a literary great.

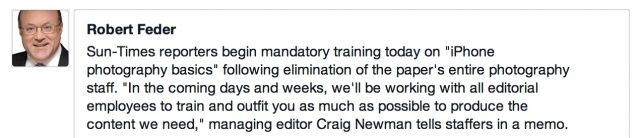

Really, it was only a matter of time. First, digital cameras killed off their film-dependent predecessors and then sounded the death knell for Kodak. Now social media and the #hashtag are doing the same to the professional photographer.

History will probably show that humans are the likely cause of the mass disappearance and death of honey bees around the world.

“There are three kinds of lies: lies, damned lies, and statistics”, goes the adage popularized by author Mark Twain.

Artist Ai Weiwei has suffered far more at the hands of the Chinese authorities than Andy Warhol ever did from FBI surveillance. Yet the two are remarkably similar: brash and polarizing views, distinctive art and creative processes, masterful self-promotion, savvy media manipulation and global ubiquity. This is all the more astounding given Ai Weiwei’s arrest, detentions and prohibition on travel outside of Beijing. He has even made it to the Venice Biennale this year, though only his art, of course.
