[tube]RfeaNKcffMk[/tube]

Let me begin with a quote for our times from David Bowie, published in Performing Songwriter in 2003.

“Fame itself, of course, doesn’t really afford you anything more than a good seat in a restaurant. That must be pretty well known by now. I’m just amazed how fame is being posited as the be all and end all, and how many of these young kids who are being foisted on the public have been talked into this idea that anything necessary to be famous is all right. It’s a sad state of affairs. However arrogant and ambitious I think we were in my generation, I think the idea was that if you do something really good, you’ll become famous. The emphasis on fame itself is something new. Now it’s, to be famous you should do what it takes, which is not the same thing at all. And it will leave many of them with this empty feeling.”

Thirteen years on, and just a few days following Bowie’s tragic death, his words on fame remain startlingly appropriate. We now live in a world where fame can be pursued, manufactured and curated without needing any particular talent — social media has seen to that.

This new type of fame, let’s call it insta-fame, is a very different condition from our typical notion of old fame. Old fame may be enabled by a gorgeous voice, or acting prowess, or a way with the written word, or skill with a tennis racket, at the wheel of a race car or on a precipitous ski slope, or it may come from walking on the surface of the Moon, from winning the Spelling Bee, or from devising a cure for polio.

It’s easy to confuse insta-fame with old fame: both offer a huge following of adoring strangers and both can potentially lead to inordinate monetary reward. But that’s where the similarities end. Old fame came from visible public recognition and required an achievement or a specific talent, usually honed over many years or decades. Insta-fame, on the other hand, doesn’t seem to demand any specific skill and is often pursued as an end in itself. With insta-fame, public recognition has become decoupled from achievement, so much so that it no longer requires any achievement or skill other than the gathering of more public recognition. This is a gloriously self-sustaining circle that advertisers have grown to adore.

My diatribe leads me to a fascinating article on this second type of fame, insta-fame, and some of its protagonists and victims. David Bowie’s words continue to ring true.

From the Independent:

Charlie Barker is in her pyjamas, sitting in the shared kitchen of her halls of residence, with an Asda shopping trolley next to her – storage overflow from her tiny room. A Flybe plane takes off from City Airport, just across the dank water from the University of East London, where Barker studies art in surroundings that could not be greyer. The only way out is the DLR, the driverless trains that link Docklands to the brighter parts of town.

“I always wanted to move to London and when everyone was signing up for uni, I was like, I don’t want to go to uni – I just want to go to London,” says Barker, who calls David Bowie her “spirit animal” and is obsessed with Hello Kitty. But going to London is hard if you’re 18 and from Nottingham and don’t have a plan or money. “So then I was like, OK, I’ll go to uni in London.” So she ended up in Beckton, which is closer to Essex than the city centre.

It’s lunchtime and one of Barker’s housemates walks in to stick something in the microwave, which he quickly takes back to his room. They exchange hellos. “I don’t really talk to people here, I just go to central to meet my friends,” she says. “But the DLR is so long and tragic, especially when you’re not in the brightest of moods.” I ask her if she often goes to the student canteen. I noticed it on the way here; it’s called “Munch”. She’s in her second year and says she didn’t know it existed.

These are unlikely surroundings, in some ways. Because while Barker is a nice, normal student doing normal student things, she’s also famous. I take out my phone and we look through her pictures on Instagram, where her following is greater than the combined circulations of Hello! and OK! magazines. Now @charliexbarker is in the room and things become more colourful. Pink, mainly. And blue, and glitter, and selfies, and skin.

And Hello Kitty. “I wanted to get a tattoo on the palm of my hand and because it was painful I was like, ‘what do I believe in enough to get tattooed on my hand for the rest of my life?’, and I was like – Hello Kitty. My Mum was like, ‘you freak!'” The drawing of the Japanese cartoon cat features in a couple of Barker’s 700-plus photos. In a portrait of her hand, she holds a pink and blue lollipop, and her fingernails are painted pink and blue. The caption: “Pink n blu pink n blu.”

Before that, Barker, now 19, wanted a tattoo saying “Drink water, eat pussy”, but decided against it. The slogan appears in another photo, scrawled on the pavement in pink chalk as she sits wearing a Betty Boop jacket in pink and black, with pink hair and fishnets. “I was bumming around with my friend Daniel, who’s a photographer, and I wanted to see if I could do all the styling and everything,” she says. “We’d already done four or five looks and we were like, oh my God, so we just wet my hair and went with it.”

“Poco esplicita,” suggests one of her Italian followers beside the photo. Barker rarely replies to comments these days, most of which are from fans (“I love uuuuu… Your style just killing me… IM SCREAMING”) and doesn’t say much in her captions (“I do wat I want” in this case). Yet her followers – 622,000 of them at the time of writing – love her pictures, many of which receive more than 50,000 likes. She’s not on reality TV, can’t sing and has no famous relatives. She’s not rich and has no access to private jets or tigers as pets. Yet with a photographic glimpse – or at least suggestion – of a life of colour and attitude, a student in Beckton has earned the sort of fame that only exists on Instagram.

“That sounds so weird, saying that, stop it!” she says when I ask if she feels famous. “No, I’m not famous. I’m just doing my own thing, getting recognition doing it. And I think everyone’s famous now, aren’t they? Everyone has an Instagram and everyone’s famous.”

The photo app, bought by Facebook in 2012, boomed last year, overtaking Twitter in September with 400 million active monthly users. But there are degrees of Instafame. And if one measure, beyond an audience, is a change to one’s life, then Barker has it. So too do Brian Whittaker (@brianhwhittaker) and Olivia Knight-Butler (@livrosekb), whose followings also defy celebrity norms. Whittaker, an insanely grown-up 16-year-old from Solihull, also rejects the idea that he’s famous at all, despite having a quarter of a million followers. “I don’t see followers as a real thing, it’s just being popular on a page,” he says from his mum’s house.

Yet in the next sentence he talks about the best indicator of fame in any age. “I get stopped in the street quite a bit now. In the summer I was in Singapore with my parents and people were taking pictures of me. One person stopped me and then when I got back to the hotel room I saw pictures of me on Instagram shopping. People had tagged me and were asking, ‘is this really you, are you in Singapore?'”

“I get so so flattered when people ask me for a picture in the street,” Barker says. Most of her fans are younger teenage girls. Many have set up dedicated Charlie Barker fan accounts, re-posting her images adorned with love hearts. They idolise her. “I feel like I have to give them eternal love for it, I’m like, oh my God, that is so sweet.”

Read the entire article here.

Video: Fame, David Bowie. Courtesy mudroll / YouTube.

Many political scholars, commentators and members of the public — of all political stripes — who remember

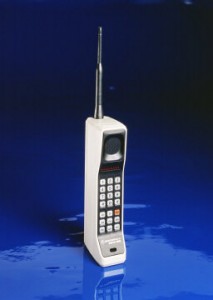

Businesses and brands come and they go. Seemingly unassailable corporations, often valued in the tens of billions of dollars (and sometimes more), fall to the incessant march of technological change and, increasingly, to the ever-fickle desires of the consumer.

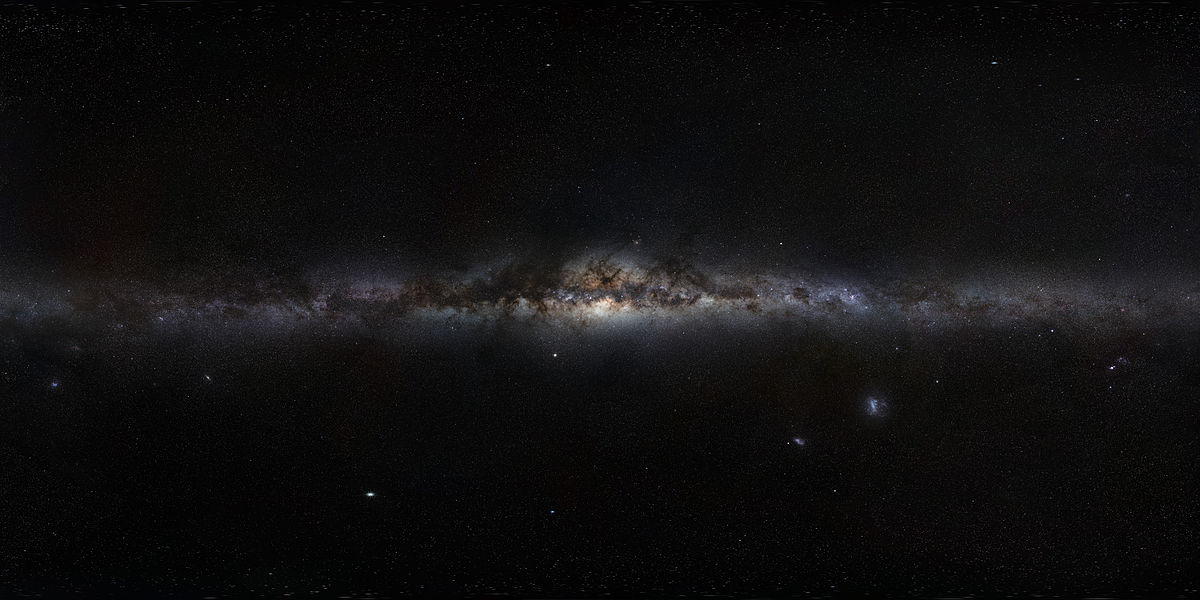

Ever had that curious tingling sensation at the back and base of your neck? Of course you have. Perhaps you’ve felt it while listening to a particular piece of music, watching a key scene in a movie, taking in a panorama from the top of a mountain or smelling a childhood aroma again. In fact, most people report having felt this sensation, albeit rather infrequently.

Is Nicolas Felton the Samuel Pepys of our digital age?