This NYT opinion piece hits the nail on the head. Pretending to be “stupid” to appeal to the “anti-elite” common man may well have been a good electoral strategy for Republican candidates over the last 50-60 years. Just cast your mind back to Sarah Palin as potential VP in 2008 and you’ll get my drift.

But now in 2016 we’ve entered uncharted territory: the country is on the verge of electing a shamefully ignorant man-child and he also happens to be a narcissistic psychopath with a wide range of extremely dangerous character flaws — and that’s putting it mildly.

Unfortunately for the US, and the world, the Republican nominee’s contempt for truth and reason, disdain for intellectual inquiry, and complete and utter ignorance are not an act. Though he does claim, “I know words. I have the best words. But there is no better word than stupid.”

But perhaps all is not lost: he does seem to have a sound grasp of how to acquire top-notch military and geopolitical insights — in his own (best) words, “I watch the shows.”

Welcome to the apocalypse, my friends.

From the NYT:

It’s hard to know exactly when the Republican Party assumed the mantle of the “stupid party.”

Stupidity is not an accusation that could be hurled against such prominent early Republicans as Abraham Lincoln, Theodore Roosevelt, Elihu Root and Charles Evans Hughes. But by the 1950s, it had become an established shibboleth that the “eggheads” were for Adlai Stevenson and the “boobs” for Dwight D. Eisenhower — a view endorsed by Richard Hofstadter’s 1963 book “Anti-Intellectualism in American Life,” which contrasted Stevenson, “a politician of uncommon mind and style, whose appeal to intellectuals overshadowed anything in recent history,” with Eisenhower — “conventional in mind, relatively inarticulate.” The John F. Kennedy presidency, with its glittering court of Camelot, cemented the impression that it was the Democrats who represented the thinking men and women of America.

Rather than run away from the anti-intellectual label, Republicans embraced it for their own political purposes. In his “time for choosing” speech, Ronald Reagan said that the issue in the 1964 election was “whether we believe in our capacity for self-government or whether we abandon the American Revolution and confess that a little intellectual elite in a far-distant Capitol can plan our lives for us better than we can plan them ourselves.” Richard M. Nixon appealed to the “silent majority” and the “hard hats,” while his vice president, Spiro T. Agnew, issued slashing attacks on an “effete core of impudent snobs who characterize themselves as intellectuals.”

…

Many Democrats took all this at face value and congratulated themselves for being smarter than the benighted Republicans. Here’s the thing, though: The Republican embrace of anti-intellectualism was, to a large extent, a put-on. At least until now.

Eisenhower may have played the part of an amiable duffer, but he may have been the best prepared president we have ever had — a five-star general with an unparalleled knowledge of national security affairs. When he resorted to gobbledygook in public, it was in order to preserve his political room to maneuver. Reagan may have come across as a dumb thespian, but he spent decades honing his views on public policy and writing his own speeches. Nixon may have burned with resentment of “Harvard men,” but he turned over foreign policy and domestic policy to two Harvard professors, Henry A. Kissinger and Daniel Patrick Moynihan, while his own knowledge of foreign affairs was second only to Ike’s.

There is no evidence that Republican leaders have been demonstrably dumber than their Democratic counterparts. During the Reagan years, the G.O.P. briefly became known as the “party of ideas,” because it harvested so effectively the intellectual labor of conservative think tanks like the American Enterprise Institute and the Heritage Foundation and publications like The Wall Street Journal editorial page and Commentary. Scholarly policy makers like George P. Shultz, Jeane J. Kirkpatrick and Bill Bennett held prominent posts in the Reagan administration, a tradition that continued into the George W. Bush administration — amply stocked with the likes of Paul D. Wolfowitz, John J. Dilulio Jr. and Condoleezza Rice.

…

The trend has now culminated in the nomination of Donald J. Trump, a presidential candidate who truly is the know-nothing his Republican predecessors only pretended to be.

Mr. Trump doesn’t know the difference between the Quds Force and the Kurds. He can’t identify the nuclear triad, the American strategic nuclear arsenal’s delivery system. He had never heard of Brexit until a few weeks before the vote. He thinks the Constitution has 12 Articles rather than seven. He uses the vocabulary of a fifth grader. Most damning of all, he traffics in off-the-wall conspiracy theories by insinuating that President Obama was born in Kenya and that Ted Cruz’s father was involved in the Kennedy assassination. It is hardly surprising to read Tony Schwartz, the ghostwriter for Mr. Trump’s best seller “The Art of the Deal,” say, “I seriously doubt that Trump has ever read a book straight through in his adult life.”

Mr. Trump even appears proud of his lack of learning. He told The Washington Post that he reached decisions “with very little knowledge,” but on the strength of his “common sense” and his “business ability.” Reading long documents is a waste of time because of his rapid ability to get to the gist of an issue, he said: “I’m a very efficient guy.” What little Mr. Trump does know seems to come from television: Asked where he got military advice, he replied, “I watch the shows.”

Read the entire op/ed here.

If you’re one of the few earthlings wondering what Pokémon Go is all about, and how in the space of just a few days our neighborhoods have become overrun by zombie-like players,

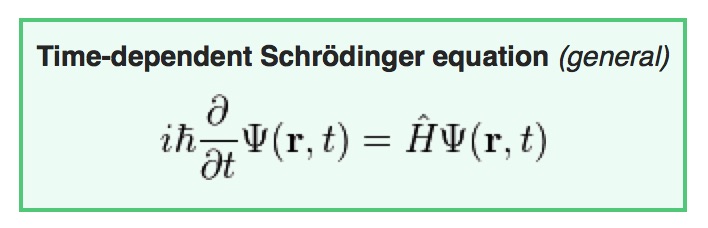

Once in every while I have to delve into the esoteric world of

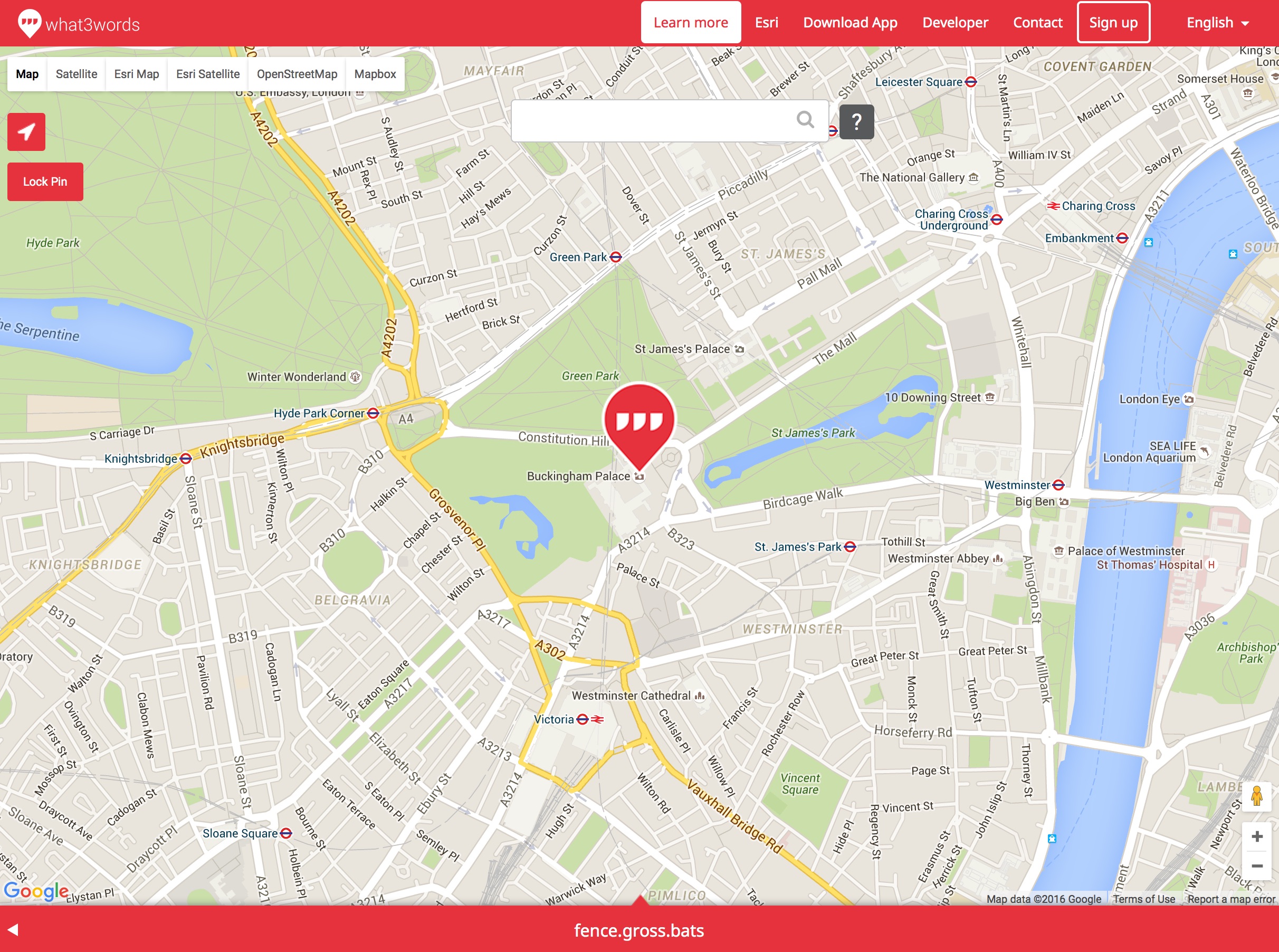

If you’ve read my blog for a while you undoubtedly know that I have a rather jaded view of tech startup culture — particularly with Silicon Valley’s myopic obsession for discovering the next multi-billion dollar mobile-consumer-facing-peer-to-peer-gig-economy-service-sharing-buzzword-laden-dating-platform-with-integrated-messaging-and-travel-meta-search app.
