London’s heavily used, urban jewel — the Tube — has been a constant location for great people-watching. The 1970s was no exception, as this collection of photographs from Bob Mazzer shows.

See more images here.

Shopping malls in the United States were in their prime in the 1970s and ’80s. Many had positioned themselves as a bright, clean, utopian alternative to inner-city blight and decay. A quarter of a century on, while the mega-malls may be thriving, their numerous smaller suburban brethren are seeing lower sales. As internet shopping and retailing pervade all reaches of our society, many midsize malls are decaying or shutting down completely. Documentary photographer Seph Lawless captures this fascinating transition in a new book, Black Friday: The Collapse of the American Shopping Mall.

From the Guardian:

It is hard to believe there has ever been any life in this place. Shattered glass crunches under Seph Lawless’s feet as he strides through its dreary corridors. Overhead lights attached to ripped-out electrical wires hang suspended in the stale air and fading wallpaper peels off the walls like dead skin.

Lawless sidesteps debris as he passes from plot to plot in this retail graveyard called Rolling Acres Mall in Akron, Ohio. The shopping centre closed in 2008, and its largest retailers, which had tried to make it as standalone stores, emptied out by the end of last year. When Lawless stops to overlook a two-storey opening near the mall’s once-bustling core, only an occasional drop of water, dribbling through missing ceiling tiles, breaks the silence.

“You came, you shopped, you dressed nice – you went to the mall. That’s what people did,” says Lawless, a pseudonymous photographer who grew up in a suburb of nearby Cleveland. “It was very consumer-driven and kind of had an ugly side, but there was something beautiful about it. There was something there.”

Gazing down at the motionless escalators, dead plants and empty benches below, he adds: “It’s still beautiful, though. It’s almost like ancient ruins.”

Dying shopping malls are speckled across the United States, often in middle-class suburbs wrestling with socioeconomic shifts. Some, like Rolling Acres, have already succumbed. Estimates on the share that might close or be repurposed in coming decades range from 15 to 50%. Americans are returning downtown; online shopping is taking a 6% bite out of brick-and-mortar sales; and to many iPhone-clutching, city-dwelling and frequently jobless young people, the culture that spawned satire like Mallrats seems increasingly dated, even cartoonish.

According to longtime retail consultant Howard Davidowitz, numerous midmarket malls, many of them born during the country’s suburban explosion after the second world war, could very well share Rolling Acres’ fate. “They’re going, going, gone,” Davidowitz says. “They’re trying to change; they’re trying to get different kinds of anchors, discount stores … [But] what’s going on is the customers don’t have the fucking money. That’s it. This isn’t rocket science.”

Shopping culture follows housing culture. Sprawling malls were therefore a natural product of the postwar era, as Americans with cars and fat wallets sprawled to the suburbs. They were thrown up at a furious pace as shoppers fled cities, peaking at a few hundred per year at one point in the 1980s, according to Paco Underhill, an environmental psychologist and author of Call of the Mall: The Geography of Shopping. Though construction has since tapered off, developers left a mall overstock in their wake.

Currently, the US contains around 1,500 of the expansive “malls” of suburban consumer lore. Most share a handful of bland features. Brick exoskeletons usually contain two storeys of inward-facing stores separated by tile walkways. Food courts serve mediocre pizza. Parking lots are big enough to easily misplace a car. And to anchor them economically, malls typically depend on department stores: huge vendors offering a variety of products across interconnected sections.

For mid-century Americans, these gleaming marketplaces provided an almost utopian alternative to the urban commercial district, an artificial downtown with less crime and fewer vermin. As Joan Didion wrote in 1979, malls became “cities in which no one lives but everyone consumes”. Peppered throughout disconnected suburbs, they were a place to see and be seen, something shoppers have craved since the days of the Greek agora. And they quickly matured into a self-contained ecosystem, with their own species – mall rats, mall cops, mall walkers – and an annual feeding frenzy known as Black Friday.

“Local governments had never dealt with this sort of development and were basically bamboozled [by developers],” Underhill says of the mall planning process. “In contrast to Europe, where shopping malls are much more a product of public-private negotiation and funding, here in the US most were built under what I call ‘cowboy conditions’.”

Shopping centres in Europe might contain grocery stores or childcare centres, while those in Japan are often built around mass transit. But the suburban American variety is hard to get to and sells “apparel and gifts and damn little else”, Underhill says.

Nearly 700 shopping centres are “super-regional” megamalls, retail leviathans usually of at least 1 million square feet and upward of 80 stores. Megamalls typically outperform their 800 slightly smaller, “regional” counterparts, though size and financial health don’t overlap entirely. It’s clearer, however, that luxury malls in affluent areas are increasingly forcing the others to fight for scraps. Strip malls – up to a few dozen tenants conveniently lined along a major traffic artery – are retail’s bottom feeders and so well-suited to the new environment. But midmarket shopping centres have begun dying off alongside the middle class that once supported them. Regional malls have suffered at least three straight years of declining profit per square foot, according to the International Council of Shopping Centres (ICSC).

Read the entire story here.

Image: Mall of America. Courtesy of Wikipedia.

It’s fascinating to see what our government agencies are doing with some of our hard earned tax dollars.

In this head-scratching example, the FBI (its Intelligence Research Support Unit, no less) has just completed an 83-page glossary of Internet slang or “leetspeak”. LOL and Ugh! (the latter is not an acronym).

Check out the document via Muckrock here — they obtained the “secret” document through the Freedom of Information Act.

From the Washington Post:

The Internet is full of strange and bewildering neologisms, which anyone but a text-addled teen would struggle to understand. So the fine, taxpayer-funded people of the FBI — apparently not content to trawl Urban Dictionary, like the rest of us — compiled a glossary of Internet slang.

An 83-page glossary. Containing nearly 3,000 terms.

The glossary was recently made public through a Freedom of Information request by the group MuckRock, which posted the PDF, called “Twitter shorthand,” online. Despite its name, this isn’t just Twitter slang: As the FBI’s Intelligence Research Support Unit explains in the introduction, it’s a primer on shorthand used across the Internet, including in “instant messages, Facebook and Myspace.” As if that Myspace reference wasn’t proof enough that the FBI’s a tad out of touch, the IRSU then promises the list will prove useful both professionally and “for keeping up with your children and/or grandchildren.” (Your tax dollars at work!)

All of these minor gaffes could be forgiven, however, if the glossary itself was actually good. Obviously, FBI operatives and researchers need to understand Internet slang — the Internet is, increasingly, where crime goes down these days. But then we get things like ALOTBSOL (“always look on the bright side of life”) and AMOG (“alpha male of group”) … within the first 10 entries.

ALOTBSOL has, for the record, been tweeted fewer than 500 times in the entire eight-year history of Twitter. AMOG has been tweeted far more often, but usually in Spanish … as a misspelling, it would appear, of “amor” and “amigo.”

Among the other head-scratching terms the FBI considers can’t-miss Internet slang:

In all fairness to the FBI, they do get some things right: “crunk” is helpfully defined as “crazy and drunk,” FF is “a recommendation to follow someone referenced in the tweet,” and a whole range of online patois is translated to its proper English equivalent: hafta is “have to,” ima is “I’m going to,” kewt is “cute.”

Read the entire article here.

Image: FBI Seal. Courtesy of U.S. Government.

Some computer scientists believe that “Eugene Goostman” may have overcome the famous hurdle proposed by Alan Turing, by cracking the eponymous Turing Test. Eugene is a 13-year-old Ukrainian “boy” constructed from computer algorithms designed to feign intelligence and mirror human thought processes. During a text-based exchange Eugene managed to convince his human interrogators that he was a real boy, and thus his creators claim to have broken the previously impenetrable Turing barrier.

Other researchers and philosophers disagree: they claim that it’s easier to construct an artificial intelligence that converses in good but limited English (Eugene is Ukrainian, after all) than it would be to develop a native anglophone adult. So, the Turing Test barrier may yet stand.

From the Guardian:

From 2001: a Space Odyssey to Her, the idea of an intelligent computer that can hold conversations with humans has long been a dream of science-fiction writers, but that fantasy may just have taken a step closer to becoming reality. On Saturday, a computer algorithm claiming to be a 13-year-old boy called Eugene Goostman passed the Turing test, it has been claimed. However, some commentators have suggested that pretending to be a 13-year-old Ukrainian child is not as difficult as pretending to be an Anglophone adult, and that the result should not count.

The program convinced 33% of the human judges at an event at the Royal Society that it was actually a human. The Turing test, which was devised in 1950 by the pioneering computer scientist and second world war codebreaker Alan Turing, is a classic benchmark in artificial intelligence – it determines a machine’s ability to show intelligent behaviour equivalent to, or indistinguishable from, that of a human.

Transcripts of the conversations from the latest Royal Society test are not yet available but below are transcripts from a previous event at Bletchley Park on 23 June 2012, with the same program.

Can you tell Eugene isn’t human?

Test 1

[12:43:37] Judge: Hi, I’m Cathy.

[12:43:43] Eugene: Cathy! Nice to meet you! You’re a woman, if I’m not mistaken.

[12:44:20] Judge: Right. What is your name?

[12:44:29] Eugene: People call me Eugene. Or “Zhenya”. It is a short name for Eugene in Russia and Ukraine.

[12:45:17] Judge: Nice name. Are you from either area?

[12:45:41] Eugene: Agree 🙂 Oh, what a fruitful conversation;-)

[12:45:52] Judge: Do you really think so?

[12:46:04] Eugene: No, your guess was wrong. I don’t think so. By the way, I still don’t know your specialty – or, possibly, I’ve missed it?

[12:46:56] Judge: What speciality? What’s yours?

[12:47:01] Eugene: Huh? And I forgot to ask you where you are from.

[12:47:51] Judge: The universe and you?

[12:48:01] Eugene: Is it your hobby to ask little poor Jewish boys such tricky questions they don’t have an answer for?

Test 2

[16:11:25] Judge: what music do you like?

[16:11:32] Eugene: I like Eminem – because he doesn’t have a thing to do with “polite correctness”.

[16:11:46] Judge: OMG i love eminem, he’s my favourite artist!

[16:12:00] Eugene: I wonder what Eminem’s nick mean. I associate it only with a word “enema”. But he is a cool guy, I like his The Real Slim Shady.

[16:12:55] Judge: i know what his nickname means, his real name is Marshall Mathers. From the initials M&M phynetically sounding like Eminem.

[16:13:06] Eugene: Eminem is a famous crapper. No, raper… ripper… Yeah, I mean – rapper. I like his Stan and The Real Slim Shady.

[16:13:32] Judge: I like Hailies Song.

[16:13:46] Eugene: Interesting tastes. I like science-fiction books, by the way. Where do you came from, by the way? Could you tell me about the place where you live?

[16:14:22] Judge: Epsom, home of the derby. yourself?

[16:14:27] Eugene: My guinea pig says that name Derby sounds very nice.

[16:14:41] Judge: i shopuld [sic] talk with him sometime.

[16:14:48] Eugene: I think you should take some typing lessons. Or just type more accurately.

[16:15:04] Judge: That’s very rude.

[16:15:09] Eugene: Ummm … Frankly, I didn’t get your question 🙁

[16:15:49] Judge: “Ummm” isnt a word. i think you need typing lessons

[16:15:58] Eugene: My thoughts are same. By the way, I still don’t know your specialty – or, possibly, I’ve missed it?

Test 3

[16:06:31] Judge: Hi there, do you prefer to wear a dress or trousers to work?

[16:06:37] Eugene: I am a scholar. I’m too young to make money.

[16:07:32] Judge: What was the last film you saw at the cinema?

[16:07:38] Eugene: Huh? Could you tell me what are you? I mean your profession.

[16:08:11] Judge: I am an IT manager, and you?

[16:08:16] Eugene: Manager? So how is it to work as manager?

[16:09:13] Judge: Hard work but rewarding, what do you do?

[16:09:18] Eugene: I am only 13, so I’m attending school so far.

[16:09:51] Judge: Have you seen teletubbies?

[16:10:04] Eugene: No, I haven’t seen it, unfortunately. But I hope it is better than these crappy Star Wars. And I forgot to ask you where you are from …

[16:10:15] Judge: Brighton, and you?

Read the entire story and Eugene’s conversation with real humans here.

Image: A conversation with Eugene Goostman. Courtesy of BBC.

Connoisseurs of Nutella — that wonderful concoction of hazelnuts and chocolate — are celebrating 50 years of the iconic Italian spread. Here’s to the next 50 bites, sorry years! Say no more.

From the Guardian:

In Piedmont they have been making gianduiotto, a confectionery combining hazelnuts and cocoa sold in a pretty tinfoil wrapper, since the mid-18th century. They realised long ago that the nuts, which are plentiful in the surrounding hills, are a perfect match for chocolate. But no one had any idea that their union would prove so harmonious, lasting and fruitful. Only after the second world war was this historic marriage finally sealed.

Cocoa beans are harder to come by and, consequently, more expensive. Pietro Ferrero, an Alba-based pastry cook, decided to turn the problem upside down. Chocolate should not be allowed to dictate its terms. By using more nuts and less cocoa, one could obtain a product that was just as good and not as costly. What is more, it would be spread.

Nutella, one of the world’s best-known brands, celebrated its 50th anniversary in Alba last month. In telling the story of this chocolate spread, it’s difficult to avoid cliches: a success story emblematic of Italy’s postwar recovery, the tale of a visionary entrepreneur and his perseverance, a business model driven by a single product.

The early years were spectacular. In 1946 the Ferrero brothers produced and sold 300kg of their speciality; nine months later output had reached 10 tonnes. Pietro stayed at home making the spread. Giovanni went to market across Italy in his little Fiat. In 1948 Ferrero, now a limited company, moved into a 5,000 sq metre factory equipped to produce 50 tonnes of gianduiotto a month.

By 1949 the process was nearing perfection, with the launch of the “supercrema” version, which was smoother and stuck more to the bread than the knife. It was also the year Pietro died. He did not live long enough to savour his triumph.

His son Michele was driven by the same obsession with greater spreadability. Under his leadership Ferrero became an empire. But it would take another 15 years of hard work and endless experiments before finally, in 1964, Nutella was born.

The firm now sells 365,000 tonnes of Nutella a year worldwide, the biggest consumers being the Germans, French, Italians and Americans. The anniversary was, of course, the occasion for a big promotional operation. At a gathering in Rome last month, attended by two government ministers, journalists received a 1kg jar marked with the date and a commemorative Italian postage stamp. It is an ideal opportunity for Ferrero – which also owns the Tic Tac, Ferrero Rocher, Kinder and Estathé brands, among others – to affirm its values and rehearse its well-established narrative.

There are no recent pictures of the patriarch Michele, who divides his time between Belgium and Monaco. According to Forbes magazine he was worth $9.5bn in 2009, making him the richest person in Italy. He avoids the media and making public appearances, even eschewing the boards of leading Italian firms.

His son Giovanni, who has managed the company on his own after the early death of his brother Pietro in 2011, only agreed to a short interview on Italy’s main public TV channel. He abides by the same rule as his father: “Only on two occasions should the papers mention one’s name – birth and death.”

In contrast, Ferrero executives have plenty to say about both products and the company, with its 30,000-strong workforce at 14 locations, its €8bn ($10bn) revenue, 72% share of the chocolate-spreads market, 5 million friends on Facebook, 40m Google references, its hazelnut plantations in both hemispheres securing it a round-the-year supply of fresh ingredients and, of course, its knowhow.

“The recipe for Nutella is not a secret like Coca-Cola,” says marketing manager Laurent Cremona. “Everyone can find out the ingredients. We simply know how to combine them better than other people.”

Be that as it may, the factory in Alba is as closely guarded as Fort Knox and visits are not allowed. “It’s not a company, it’s an oasis of happiness,” says Francesco Paolo Fulci, a former ambassador and president of the Ferrero foundation. “In 70 years, we haven’t had a single day of industrial action.”

Read the entire article here.

Image: Never enough Nutella. Courtesy of secret Nutella fans the world over / Ferrero, S.P.A

Dear readers, theDiagonal is in the midst of a major dislocation in May-June 2014. Thus, your friendly editor would like to apologize for the recent, intermittent service. While theDiagonal lives online, its human-powered (currently) editor is physically relocating with family to Boulder, CO. Normal, daily service from theDiagonal will resume in July.

The city of Boulder intersects Colorado State Highway 119, as it sweeps on a SW to NE track from the Front Range towards the Central Plains. Coincidentally, or not, highway 119 is more affectionately known as The Diagonal.

Image: The Flatirons, mountain formations, in Boulder, Colorado. Courtesy of Jesse Varner / AzaToth / Wikipedia.

If you live online and write or share images it’s likely that you’ve been, or will soon be, sued by the predatory Getty Images. Your kindly editor at theDiagonal uses images found to be in the public domain or references them as fair use in this blog, and yet has fallen prey to this extortionate nuisance of a company.

Getty with its army of fee extortion collectors — many are not even legally trained or accredited — will find reason to send you numerous legalistic and threatening letters demanding hundreds of dollars in compensation and damages. It will do this without sound proof, relying on the threats to cajole unwary citizens to part with significant sums. This is such a big market for Getty that numerous services, such as this one, have sprung up over the years to help writers and bloggers combat the Getty extortion.

With that in mind, it’s refreshing to see the Metropolitan Museum of Art in New York taking a rather different stance: the venerable institution is doing us all a wonderful service by making many hundreds of thousands of classic images available online for free. Getty take that!

From WSJ:

This month, the Metropolitan Museum of Art released for download about 400,000 digital images of works that are in the public domain. The images, which are free to use for non-commercial use without permission or fees, may now be downloaded from the museum’s website. The museum will continue to add images to the collection as they digitize files as part of the initiative Open Access for Scholarly Content (OASC).

When asked about the impact of the initiative, Sree Sreenivasan, Chief Digital Officer, said the new program would provide increased access and streamline the process of obtaining these images. “In keeping with the Museum’s mission, we hope the new image policy will stimulate new scholarship in a variety of media, provide greater access to our vast collection, and broaden the reach of the Museum to researchers world-wide. By providing open access, museums and scholars will no longer have to request permission to use our public domain images, they can download the images directly from our website.”

Thomas P. Campbell, director and chief executive of the Metropolitan Museum of Art, said the Met joins a growing number of museums using an open-access policy to make available digital images of public domain works. “I am delighted that digital technology can open the doors to this trove of images from our encyclopedic collection,” Mr. Campbell said in his May 16 announcement. Other New York institutions that have initiated similar programs include the New York Public Library (map collection), the Brooklyn Academy of Music and the New York Philharmonic.

See more images here.

Image: “The Open Door,” earlier than May 1844. Courtesy of William Henry Fox Talbot/The Metropolitan Museum of Art, New York.

A sentient robot is the long-held dream of both artificial intelligence researcher and science fiction author. Yet, some leading mathematicians theorize it may never happen, despite our accelerating technological prowess.

From New Scientist:

So long, robot pals – and robot overlords. Sentient machines may never exist, according to a variation on a leading mathematical model of how our brains create consciousness.

Over the past decade, Giulio Tononi at the University of Wisconsin-Madison and his colleagues have developed a mathematical framework for consciousness that has become one of the most influential theories in the field. According to their model, the ability to integrate information is a key property of consciousness. They argue that in conscious minds, integrated information cannot be reduced into smaller components. For instance, when a human perceives a red triangle, the brain cannot register the object as a colourless triangle plus a shapeless patch of red.

But there is a catch, argues Phil Maguire at the National University of Ireland in Maynooth. He points to a computational device called the XOR logic gate, which involves two inputs, A and B. The output of the gate is “0” if A and B are the same and “1” if A and B are different. In this scenario, it is impossible to predict the output based on A or B alone – you need both.

Crucially, this type of integration requires loss of information, says Maguire: “You have put in two bits, and you get one out. If the brain integrated information in this fashion, it would have to be continuously haemorrhaging information.”
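Maguire’s point about the XOR gate can be checked in a few lines. The snippet below is only an illustrative sketch (the names xor_gate, preimages and outputs_given_a are invented here, not taken from the research): it lists which input pairs lead to each output, showing that one output bit cannot tell you which two bits went in, and that knowing A alone leaves the output undetermined.

```python
from collections import defaultdict
from itertools import product


def xor_gate(a: int, b: int) -> int:
    """Return 0 if the two input bits match and 1 if they differ."""
    return a ^ b


# Group every possible input pair by the output it produces.
preimages = defaultdict(list)
for a, b in product((0, 1), repeat=2):
    preimages[xor_gate(a, b)].append((a, b))

# Each output value is reachable from two different input pairs,
# so the inputs cannot be recovered from the output alone.
for output, inputs in sorted(preimages.items()):
    print(f"output {output} <- inputs {inputs}")

# Knowing only A still leaves both outputs possible; B is needed too.
outputs_given_a = {a: {xor_gate(a, b) for b in (0, 1)} for a in (0, 1)}
print(outputs_given_a)
```

Two bits go in and one comes out, which is the loss of information Maguire describes.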

Maguire and his colleagues say the brain is unlikely to do this, because repeated retrieval of memories would eventually destroy them. Instead, they define integration in terms of how difficult information is to edit.

Consider an album of digital photographs. The pictures are compiled but not integrated, so deleting or modifying individual images is easy. But when we create memories, we integrate those snapshots of information into our bank of earlier memories. This makes it extremely difficult to selectively edit out one scene from the “album” in our brain.

Based on this definition, Maguire and his team have shown mathematically that computers can’t handle any process that integrates information completely. If you accept that consciousness is based on total integration, then computers can’t be conscious.

“It means that you would not be able to achieve the same results in finite time, using finite memory, using a physical machine,” says Maguire. “It doesn’t necessarily mean that there is some magic going on in the brain that involves some forces that can’t be explained physically. It is just so complex that it’s beyond our abilities to reverse it and decompose it.”

Disappointed? Take comfort – we may not get Rosie the robot maid, but equally we won’t have to worry about the world-conquering Agents of The Matrix.

Neuroscientist Anil Seth at the University of Sussex, UK, applauds the team for exploring consciousness mathematically. But he is not convinced that brains do not lose information. “Brains are open systems with a continual turnover of physical and informational components,” he says. “Not many neuroscientists would claim that conscious contents require lossless memory.”

Read the entire story here.

Image: Robby the Robot, Forbidden Planet. Courtesy of San Diego Comic Con, 2006 / Wikipedia.

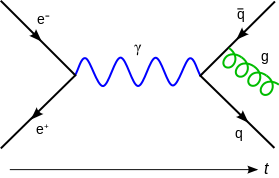

Particle physicists will soon attempt to reverse the direction of Einstein’s famous equation delineating energy-matter equivalence, E=mc². Next year, they plan to crash quanta of light into each other to create matter. Cool or what!

From the Guardian:

Researchers have worked out how to make matter from pure light and are drawing up plans to demonstrate the feat within the next 12 months.

The theory underpinning the idea was first described 80 years ago by two physicists who later worked on the first atomic bomb. At the time they considered the conversion of light into matter impossible in a laboratory.

But in a report published on Sunday, physicists at Imperial College London claim to have cracked the problem using high-powered lasers and other equipment now available to scientists.

“We have shown in principle how you can make matter from light,” said Steven Rose at Imperial. “If you do this experiment, you will be taking light and turning it into matter.”

The scientists are not on the verge of a machine that can create everyday objects from a sudden blast of laser energy. The kind of matter they aim to make comes in the form of subatomic particles invisible to the naked eye.

The original idea was written down by two US physicists, Gregory Breit and John Wheeler, in 1934. They worked out that – very rarely – two particles of light, or photons, could combine to produce an electron and its antimatter equivalent, a positron. Electrons are particles of matter that form the outer shells of atoms in the everyday objects around us.

But Breit and Wheeler had no expectations that their theory would be proved any time soon. In their study, the physicists noted that the process was so rare and hard to produce that it would be “hopeless to try to observe the pair formation in laboratory experiments”.

Oliver Pike, the lead researcher on the study, said the process was one of the most elegant demonstrations of Einstein’s famous relationship that shows matter and energy are interchangeable currencies. “The Breit-Wheeler process is the simplest way matter can be made from light and one of the purest demonstrations of E=mc²,” he said.
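To put a number on that relationship, here is a rough back-of-the-envelope sketch of how much energy an electron-positron pair “costs”. It is not taken from the Imperial study: the constants are standard published values, the function names are invented for illustration, and the head-on threshold condition (the product of the two photon energies must be at least the square of the electron’s rest energy) is textbook kinematics rather than anything stated in the article.

```python
# Standard physical constants (SI units).
ELECTRON_MASS_KG = 9.1093837015e-31
SPEED_OF_LIGHT_M_S = 299_792_458.0
JOULES_PER_EV = 1.602176634e-19


def rest_energy_mev(mass_kg: float) -> float:
    """Rest energy E = m * c**2, converted from joules to MeV."""
    return mass_kg * SPEED_OF_LIGHT_M_S**2 / JOULES_PER_EV / 1e6


electron_rest_mev = rest_energy_mev(ELECTRON_MASS_KG)
# A positron has the same mass as an electron, so a pair costs twice as much.
pair_rest_mev = 2 * electron_rest_mev


def can_make_pair_head_on(e1_mev: float, e2_mev: float) -> bool:
    """Assumed textbook condition for two photons colliding head-on."""
    return e1_mev * e2_mev >= electron_rest_mev**2


print(f"electron rest energy: {electron_rest_mev:.3f} MeV")
print(f"minimum energy for one pair: {pair_rest_mev:.3f} MeV")
# Illustrative photon energies only, not the experiment's actual values.
print(can_make_pair_head_on(1.0, 1.0))
print(can_make_pair_head_on(0.1, 0.1))
```

The electron’s rest energy comes out at roughly half a million electronvolts, so the colliding photons must together carry about a million electronvolts, far more than the couple of electronvolts carried by a photon of visible light.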

Writing in the journal Nature Photonics, the scientists describe how they could turn light into matter through a number of separate steps. The first step fires electrons at a slab of gold to produce a beam of high-energy photons. Next, they fire a high-energy laser into a tiny gold capsule called a hohlraum, from the German for “empty room”. This produces light as bright as that emitted from stars. In the final stage, they send the first beam of photons into the hohlraum where the two streams of photons collide.

The scientists’ calculations show that the setup squeezes enough particles of light with high enough energies into a small enough volume to create around 100,000 electron-positron pairs.

The process is one of the most spectacular predictions of a theory called quantum electrodynamics (QED) that was developed in the run up to the second world war. “You might call it the most dramatic consequence of QED and it clearly shows that light and matter are interchangeable,” Rose told the Guardian.

The scientists hope to demonstrate the process in the next 12 months. There are a number of sites around the world that have the technology. One is the huge Omega laser in Rochester, New York. But another is the Orion laser at Aldermaston, the atomic weapons facility in Berkshire.

A successful demonstration will encourage physicists who have been eyeing the prospect of a photon-photon collider as a tool to study how subatomic particles behave. “Such a collider could be used to study fundamental physics with a very clean experimental setup: pure light goes in, matter comes out. The experiment would be the first demonstration of this,” Pike said.

Read the entire story here.

Image: Feynman diagram for gluon radiation. Courtesy of Wikipedia.

Physicists and astronomers observe the very small and the very big. Although they are focused on very different areas of scientific endeavor and discovery, they tend to agree on one key observation: 95.5 percent of the cosmos is currently invisible to us. That is, only around 4.5 percent of our physical universe is made up of matter or energy that we can see or sense directly through experimental interaction. The rest, well, it’s all dark: so-called dark matter and dark energy. But nobody really knows what or how or why. Effectively, despite tremendous progress in our understanding of our world, we are still in a global “Dark Age”.

From the New Scientist:

TO OUR eyes, stars define the universe. To cosmologists they are just a dusting of glitter, an insignificant decoration on the true face of space. Far outweighing ordinary stars and gas are two elusive entities: dark matter and dark energy. We don’t know what they are… except that they appear to be almost everything.

These twin apparitions might be enough to give us pause, and make us wonder whether all is right with the model universe we have spent the past century so carefully constructing. And they are not the only thing. Our standard cosmology also says that space was stretched into shape just a split second after the big bang by a third dark and unknown entity called the inflaton field. That might imply the existence of a multiverse of countless other universes hidden from our view, most of them unimaginably alien – just to make models of our own universe work.

Are these weighty phantoms too great a burden for our observations to bear – a wholesale return of conjecture out of a trifling investment of fact, as Mark Twain put it?

The physical foundation of our standard cosmology is Einstein’s general theory of relativity. Einstein began with a simple observation: that any object’s gravitational mass is exactly equal to its resistance to acceleration, or inertial mass. From that he deduced equations that showed how space is warped by mass and motion, and how we see that bending as gravity. Apples fall to Earth because Earth’s mass bends space-time.

In a relatively low-gravity environment such as Earth, general relativity’s effects look very like those predicted by Newton’s earlier theory, which treats gravity as a force that travels instantaneously between objects. With stronger gravitational fields, however, the predictions diverge considerably. One extra prediction of general relativity is that large accelerating masses send out tiny ripples in the weave of space-time called gravitational waves. While these waves have never yet been observed directly, a pair of dense stars called pulsars, discovered in 1974, are spiralling in towards each other just as they should if they are losing energy by emitting gravitational waves.

Gravity is the dominant force of nature on cosmic scales, so general relativity is our best tool for modelling how the universe as a whole moves and behaves. But its equations are fiendishly complicated, with a frightening array of levers to pull. If you then give them a complex input, such as the details of the real universe’s messy distribution of mass and energy, they become effectively impossible to solve. To make a working cosmological model, we make simplifying assumptions.

The main assumption, called the Copernican principle, is that we are not in a special place. The cosmos should look pretty much the same everywhere – as indeed it seems to, with stuff distributed pretty evenly when we look at large enough scales. This means there’s just one number to put into Einstein’s equations: the universal density of matter.

Einstein’s own first pared-down model universe, which he filled with an inert dust of uniform density, turned up a cosmos that contracted under its own gravity. He saw that as a problem, and circumvented it by adding a new term into the equations by which empty space itself gains a constant energy density. Its gravity turns out to be repulsive, so adding the right amount of this “cosmological constant” ensured the universe neither expanded nor contracted. When observations in the 1920s showed it was actually expanding, Einstein described this move as his greatest blunder.

It was left to others to apply the equations of relativity to an expanding universe. They arrived at a model cosmos that grows from an initial point of unimaginable density, and whose expansion is gradually slowed down by matter’s gravity.

This was the birth of big bang cosmology. Back then, the main question was whether the expansion would ever come to a halt. The answer seemed to be no; there was just too little matter for gravity to rein in the fleeing galaxies. The universe would coast outwards forever.

Then the cosmic spectres began to materialise. The first emissary of darkness put a foot in the door as long ago as the 1930s, but was only fully seen in the late 1970s when astronomers found that galaxies are spinning too fast. The gravity of the visible matter would be too weak to hold these galaxies together according to general relativity, or indeed plain old Newtonian physics. Astronomers concluded that there must be a lot of invisible matter to provide extra gravitational glue.

The existence of dark matter is backed up by other lines of evidence, such as how groups of galaxies move, and the way they bend light on its way to us. It is also needed to pull things together to begin galaxy-building in the first place. Overall, there seems to be about five times as much dark matter as visible gas and stars.

Dark matter’s identity is unknown. It seems to be something beyond the standard model of particle physics, and despite our best efforts we have yet to see or create a dark matter particle on Earth (see “Trouble with physics: Smashing into a dead end”). But it changed cosmology’s standard model only slightly: its gravitational effect in general relativity is identical to that of ordinary matter, and even such an abundance of gravitating stuff is too little to halt the universe’s expansion.

The second form of darkness required a more profound change. In the 1990s, astronomers traced the expansion of the universe more precisely than ever before, using measurements of explosions called type 1a supernovae. They showed that the cosmic expansion is accelerating. It seems some repulsive force, acting throughout the universe, is now comprehensively trouncing matter’s attractive gravity.

This could be Einstein’s cosmological constant resurrected, an energy in the vacuum that generates a repulsive force, although particle physics struggles to explain why space should have the rather small implied energy density. So imaginative theorists have devised other ideas, including energy fields created by as-yet-unseen particles, and forces from beyond the visible universe or emanating from other dimensions.

Whatever it might be, dark energy seems real enough. The cosmic microwave background radiation, released when the first atoms formed just 370,000 years after the big bang, bears a faint pattern of hotter and cooler spots that reveals where the young cosmos was a little more or less dense. The typical spot sizes can be used to work out to what extent space as a whole is warped by the matter and motions within it. It appears to be almost exactly flat, meaning all these bending influences must cancel out. This, again, requires some extra, repulsive energy to balance the bending due to expansion and the gravity of matter. A similar story is told by the pattern of galaxies in space.

All of this leaves us with a precise recipe for the universe. The average density of ordinary matter in space is 0.426 yoctograms per cubic metre (a yoctogram is 10^-24 grams, and 0.426 of one equates to about a quarter of the mass of a proton), making up 4.5 per cent of the total energy density of the universe. Dark matter makes up 22.5 per cent, and dark energy 73 per cent (see diagram). Our model of a big-bang universe based on general relativity fits our observations very nicely – as long as we are happy to make 95.5 per cent of it up.
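The recipe can be checked with a few lines of arithmetic. The sketch below uses only the shares and the ordinary-matter density quoted in the passage; the variable names are illustrative, and the implied total density is derived here by simple proportion rather than taken from the article.

```python
# Shares of the total energy density of the universe, in per cent (quoted figures).
shares = {"ordinary matter": 4.5, "dark matter": 22.5, "dark energy": 73.0}

# Average density of ordinary matter in yoctograms per cubic metre (quoted figure).
ordinary_density_yg = 0.426

# The three components should account for everything.
total_share = sum(shares.values())

# The part we cannot see directly: dark matter plus dark energy.
dark_share = shares["dark matter"] + shares["dark energy"]

# Ratio of dark matter to ordinary matter.
dark_to_ordinary = shares["dark matter"] / shares["ordinary matter"]

# Derived, not quoted: if 0.426 yg per cubic metre is 4.5 per cent of the total,
# the total mass-equivalent density follows by proportion.
implied_total_yg = ordinary_density_yg / (shares["ordinary matter"] / 100)

print(f"components sum to {total_share} per cent")
print(f"dark share is {dark_share} per cent")
print(f"dark matter outweighs ordinary matter by a factor of {dark_to_ordinary}")
print(f"implied total density is about {implied_total_yg:.2f} yg per cubic metre")
```

The factor that comes out for dark matter against ordinary matter agrees with the "about five times" figure given earlier in the article.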

Arguably, we must invent even more than that. To explain why the universe looks so extraordinarily uniform in all directions, today’s consensus cosmology contains a third exotic element. When the universe was just 10^-36 seconds old, an overwhelming force took over. Called the inflaton field, it was repulsive like dark energy, but far more powerful, causing the universe to expand explosively by a factor of more than 10^25, flattening space and smoothing out any gross irregularities.

When this period of inflation ended, the inflaton field transformed into matter and radiation. Quantum fluctuations in the field became slight variations in density, which eventually became the spots in the cosmic microwave background, and today’s galaxies. Again, this fantastic story seems to fit the observational facts. And again it comes with conceptual baggage. Inflation is no trouble for general relativity – mathematically it just requires an add-on term identical to the cosmological constant. But at one time this inflaton field must have made up 100 per cent of the contents of the universe, and its origin poses as much of a puzzle as either dark matter or dark energy. What’s more, once inflation has started it proves tricky to stop: it goes on to create a further legion of universes divorced from our own. For some cosmologists, the apparent prediction of this multiverse is an urgent reason to revisit the underlying assumptions of our standard cosmology (see “Trouble with physics: Time to rethink cosmic inflation?”).

The model faces a few observational niggles, too. The big bang makes much more lithium-7 in theory than the universe contains in practice. The model does not explain the possible alignment in some features in the cosmic background radiation, or why galaxies along certain lines of sight seem biased to spin left-handedly. A newly discovered supergalactic structure 4 billion light years long calls into question the assumption that the universe is smooth on large scales.

Read the entire story here.

Image: Petrarch, who first conceived the idea of a European “Dark Age”, by Andrea di Bartolo di Bargilla, c1450. Courtesy of Galleria degli Uffizi, Florence, Italy / Wikipedia.

Feats of memory have long been the staple of human endeavor — for instance, memorizing and recalling Pi to hundreds of decimal places. Nowadays, however, memorization is a competitive sport replete with grand prizes, worthy of a place in an X-Games tournament.

From the NYT:

The last match of the tournament had all the elements of a classic showdown, pitting style versus stealth, quickness versus deliberation, and the world’s foremost card virtuoso against its premier numbers wizard.

If not quite Ali-Frazier or Williams-Sharapova, the duel was all the audience of about 100 could ask for. They had come to the first Extreme Memory Tournament, or XMT, to see a fast-paced, digitally enhanced memory contest, and that’s what they got.

The contest, an unusual collaboration between industry and academic scientists, featured one-minute matches between 16 world-class “memory athletes” from all over the world as they met in a World Cup-like elimination format. The grand prize was $20,000; the potential scientific payoff was large, too.

One of the tournament’s sponsors, the company Dart NeuroScience, is working to develop drugs for improved cognition. The other, Washington University in St. Louis, sent a research team with a battery of cognitive tests to determine what, if anything, sets memory athletes apart. Previous research was sparse and inconclusive.

Yet as the two finalists, both Germans, prepared to face off — Simon Reinhard, 35, a lawyer who holds the world record in card memorization (a deck in 21.19 seconds), and Johannes Mallow, 32, a teacher with the record for memorizing digits (501 in five minutes) — the Washington group had one preliminary finding that wasn’t obvious.

“We found that one of the biggest differences between memory athletes and the rest of us,” said Henry L. Roediger III, the psychologist who led the research team, “is in a cognitive ability that’s not a direct measure of memory at all but of attention.”

The Memory Palace

The technique the competitors use is no mystery.

People have been performing feats of memory for ages, scrolling out pi to hundreds of digits, or phenomenally long verses, or word pairs. Most store the studied material in a so-called memory palace, associating the numbers, words or cards with specific images they have already memorized; then they mentally place the associated pairs in a familiar location, like the rooms of a childhood home or the stops on a subway line.

The Greek poet Simonides of Ceos is credited with first describing the method, in the fifth century B.C., and it has been vividly described in popular books, most recently “Moonwalking With Einstein,” by Joshua Foer.

Each competitor has his or her own variation. “When I see the eight of diamonds and the queen of spades, I picture a toilet, and my friend Guy Plowman,” said Ben Pridmore, 37, an accountant in Derby, England, and a former champion. “Then I put those pictures on High Street in Cambridge, which is a street I know very well.”

As these images accumulate during memorization, they tell an increasingly bizarre but memorable story. “I often use movie scenes as locations,” said James Paterson, 32, a high school psychology teacher in Ascot, near London, who competes in world events. “In the movie ‘Gladiator,’ which I use, there’s a scene where Russell Crowe is in a field, passing soldiers, inspecting weapons.”

Mr. Paterson uses superheroes to represent combinations of letters or numbers: “I might have Batman — one of my images — playing Russell Crowe, and something else playing the horse, and so on.”

The material that competitors attempt to memorize falls into several standard categories. Shuffled decks of cards. Random words. Names matched with faces. And numbers, either binary (ones and zeros) or integers. They are given a set amount of time to study (up to one minute in this tournament, an hour or more in others) before trying to reproduce as many cards, words or digits as possible in the order presented.

Now and then, a challenger boasts online of having discovered an entirely new method, and shows up at competitions to demonstrate it.

“Those people are easy to find, because they come in last, or close to it,” said another world-class competitor, Boris Konrad, 29, a German postdoctoral student in neuroscience. “Everyone here uses this same type of technique.”

Anyone can learn to construct a memory palace, researchers say, and with practice remember far more detail of a particular subject than before. The technique is accessible enough that preteens pick it up quickly, and Mr. Paterson has integrated it into his teaching.

“I’ve got one boy, for instance, he has no interest in academics really, but he knows the Premier League, every team, every player,” he said. “I’m working with him, and he’s using that knowledge as scaffolding to help remember what he’s learning in class.”

Experts in Forgetting

The competitors gathered here for the XMT are not just anyone, however. This is the all-world team, an elite club of laser-smart types who take a nerdy interest in stockpiling facts and pushing themselves hard.

In his doctoral study of 30 world-class performers (most from Germany, which has by far the highest concentration because there are more competitions), Mr. Konrad has found as much. The average I.Q.: 130. Average study time: 1,000 to 2,000 hours and counting. The top competitors all use some variation of the memory-palace system and test, retest and tweak it.

“I started with my own system, but now I use his,” said Annalena Fischer, 20, pointing to her boyfriend, Christian Schäfer, 22, whom she met at a 2010 memory competition in Germany. “Except I don’t use the distance runners he uses; I don’t know anything about the distance runners.” Both are advanced science students and participants in Mr. Konrad’s study.

One of the Washington University findings is predictable, if still preliminary: Memory athletes score very highly on tests of working memory, the mental sketchpad that serves as a shopping list of information we can hold in mind despite distractions.

One way to measure working memory is to have subjects solve a list of equations (5 + 4 = x; 8 + 9 = y; 7 + 2 = z; and so on) while keeping the middle numbers in mind (4, 9 and 2 in the above example). Elite memory athletes can usually store seven items, the top score on the test the researchers used; the average for college students is around two.
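The structure of that test can be sketched in a few lines. This is a minimal illustration, not the researchers' actual procedure: the function names are hypothetical, and the scoring rule (one point for each number recalled in its correct position) is an assumption, since the article does not say how the test is scored.

```python
import random


def make_trials(n_items, rng):
    """Build n_items simple sums; the second addend is the number to remember."""
    return [(rng.randint(1, 9), rng.randint(1, 9)) for _ in range(n_items)]


def to_remember(trials):
    """Return the middle numbers, in presentation order."""
    return [second for _, second in trials]


def score_recall(trials, recalled):
    """Assumed scoring rule: count numbers recalled in the correct position."""
    targets = to_remember(trials)
    return sum(1 for target, answer in zip(targets, recalled) if target == answer)


# The example from the passage: 5 + 4, 8 + 9, 7 + 2.
example = [(5, 4), (8, 9), (7, 2)]
print(to_remember(example))              # [4, 9, 2]
print(score_recall(example, [4, 9, 2]))  # 3
print(score_recall(example, [4, 2, 9]))  # 1

# A seven-item list, the top score on the test described above.
seven_items = make_trials(7, random.Random(0))
print(len(seven_items))                  # 7
```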

“And college students tend to be good at this task,” said Dr. Roediger, a co-author of the new book “Make It Stick: The Science of Successful Learning.” “What I’d like to do is extend the scoring up to, say, 21, just to see how far the memory athletes can go.”

Yet this finding raises another question: Why don’t the competitors’ memory palaces ever fill up? Players usually have many favored locations to store studied facts, but they practice and compete repeatedly. They use and reuse the same blueprints hundreds of times, and the new images seem to overwrite the old ones — virtually without error.

“Once you’ve remembered the words or cards or whatever it is, and reported them, they’re just gone,” Mr. Paterson said.

Many competitors say the same: Once any given competition is over, the numbers or words or facts are gone. But this is one area in which they have less than precise insight.

In its testing, which began last year, the Washington University team has given memory athletes surprise tests on “old” material — lists of words they’d been tested on the day before. On Day 2, they recalled an average of about three-quarters of the words they memorized on Day 1 (college students remembered fewer than 5 percent). That is, despite what competitors say, the material is not gone; far from it.

Yet to install a fresh image-laden “story” in any given memory palace, a memory athlete must clear away the old one in its entirety. The same process occurs when we change a password: The old one must be suppressed, so it doesn’t interfere with the new one.

One term for that skill is “attentional control,” and psychologists have been measuring it for years with standardized tests. In the best known, the Stroop test, people see words flash by on a computer screen and name the color in which a word is presented. Answering is nearly instantaneous when the color and the word match — “red” displayed in red — but slower when there’s a mismatch, like “red” displayed in blue.
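The matched and mismatched conditions are easy to state precisely. The sketch below is only an illustration of how such trials could be built: the color list and function names are assumptions, and it models the trial structure only, with no timing or response data.

```python
import random

# Assumed color set; the article mentions only red and blue.
COLORS = ["red", "blue", "green", "yellow"]


def make_trial(rng, congruent):
    """Pick a color word, then an ink color that matches it or deliberately does not."""
    word = rng.choice(COLORS)
    if congruent:
        ink = word
    else:
        ink = rng.choice([color for color in COLORS if color != word])
    return {"word": word, "ink": ink}


def is_congruent(trial):
    """A trial is congruent when the word names its own ink color."""
    return trial["word"] == trial["ink"]


def correct_answer(trial):
    """The task is to name the ink color, not to read the word."""
    return trial["ink"]


# The mismatch from the passage: the word "red" displayed in blue.
mismatch = {"word": "red", "ink": "blue"}
print(is_congruent(mismatch))    # False
print(correct_answer(mismatch))  # blue

rng = random.Random(1)
matched = make_trial(rng, congruent=True)
unmatched = make_trial(rng, congruent=False)
print(is_congruent(matched), is_congruent(unmatched))  # True False
```

The slower answers on mismatched trials arise because the word itself has to be ignored while the ink color is named, which is the suppression skill the passage describes.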

Read the entire article here.

The great cycle of re-invention spawned by the Internet and mobile technologies continues apace. This time it’s the entrepreneurial businesses laying the foundation for the sharing economy, whether that be beds, rooms, clothes, tuition, bicycles or cars. A few succeed to become great new businesses; most fail.

From the WSJ:

A few high-profile “sharing-economy” startups are gaining quick traction with users, including those that let consumers rent apartments and homes like Airbnb Inc., or get car rides, such as Uber Technologies Inc.

Both Airbnb and Uber are valued in the billions of dollars, a sign that investors believe the segment is hot—and a big reason why more entrepreneurs are embracing the business model.

At MassChallenge, a Boston-based program to help early-stage entrepreneurs, about 9% of participants in 2013 were starting companies to connect consumers or businesses with products and services that would otherwise go unused. That compares with about 5% in 2010, for instance.

“We’re bullish on the sharing economy, and we’ll definitely make more investments in it,” said Sam Altman, president of Y Combinator, a startup accelerator in Mountain View, Calif., and one of Airbnb’s first investors.

Yet at least a few dozen sharing-economy startups have failed since 2012, including BlackJet, a Florida-based service that touted itself as the “Uber for jet travel,” and Tutorspree, a New York service dubbed the “Airbnb for tutors.” Most ran out of money, following struggles that ranged from difficulties building a critical mass of supply and demand, to higher-than-expected operating costs.

“We ended up being unable to consistently produce a level of demand on par with what we needed to scale rapidly,” said Aaron Harris, co-founder of Tutorspree, which launched in January 2011 and shuttered in August 2013.

“If you have to reacquire the customer every six months, they’ll forget you,” said Howard Morgan, co-founder of First Round Capital, which was an investor in BlackJet. “A private jet ride isn’t something you do every day. If you’re very wealthy, you have your own plane.” By comparison, he added that he recently used Uber’s ride-sharing service three times in one day.

Consider carpooling startup Ridejoy, for example. During its first year in 2011, its user base was growing by about 30% a month, with more than 25,000 riders and drivers signed up, and an estimated 10,000 rides completed, said Kalvin Wang, one of its three founders. But by the spring of 2013, Ridejoy, which had raised $1.3 million from early-stage investors like Freestyle Capital, was facing ferocious competition from free alternatives, such as carpooling forums on college websites.

Also, some riders could—and did—begin to sidestep the middleman. Many skipped paying its 10% transaction fee by handing their drivers cash instead of paying by credit card on Ridejoy’s website or mobile app. Others just didn’t get it, and even 25,000 users wasn’t sufficient to sustain the business. “You never really have enough inventory,” said Mr. Wang.

After it folded in the summer of 2013, Ridejoy returned about half of its funding to investors, according to Mr. Wang. Alexis Ohanian, an entrepreneur in Brooklyn, N.Y., who was an investor in Ridejoy, said it “could just be the timing or execution that was off.” He cited the success so far of Lyft Inc., the two-year-old San Francisco company that is valued at more than $700 million and offers a short-distance ride-sharing service. “It turned out the short rides are what the market really wanted,” Mr. Ohanian said.

One drawback is that because much of the revenue a sharing business generates goes directly back to the suppliers—of bedrooms, parking spots, vehicles or other “shared” assets—the underlying business may be continuously strapped for cash.

Read the entire article here.

Thirteen private companies recently met in New York City to present their plans and ideas for their commercial space operations. Ranging from space tourism to private exploration of the Moon and asteroid mining, the companies gathered at the Explorers Club to herald a new phase of human exploration.

From Technology Review:

It was a rare meeting of minds. Representatives from 13 commercial space companies gathered on May 1 at a place dedicated to going where few have gone before: the Explorers Club in New York.

Amid the mansions and high-end apartment buildings just off Central Park, executives from space-tourism companies, rocket-making startups, and even a business that hopes to make money by mining asteroids for useful materials showed off displays and gave presentations.

The Explorers Club event provided a snapshot of what may be a new industry in the making. In an era when NASA no longer operates manned space missions and government funding for unmanned missions is tight, a host of startups—most funded by space enthusiasts with very deep pockets—have stepped up in hope of filling the gap. In the past few years, several have proved themselves. Elon Musk’s SpaceX, for example, delivers cargo to the International Space Station for NASA. Both Richard Branson’s Virgin Galactic and rocket-plane builder XCOR Aerospace plan to perform demonstrations this year that will help catapult commercial spaceflight from the fringe into the mainstream.

The advancements being made by space companies could matter to more than the few who can afford tickets to space. SpaceX has already shaken incumbents in the $190 billion satellite launch industry by offering cheaper rides into space for communications, mapping, and research satellites.

However, space tourism also looks set to become significantly cheaper. “People don’t have to actually go up for it to impact them,” says David Mindell, an MIT professor of aeronautics and astronautics and a specialist in the history of engineering. “At $200,000 you’ll have a lot more ‘space people’ running around, and over time that could have a big impact.” One direct result, says Mindell, may be increased public support for human spaceflight, especially “when everyone knows someone who’s been into space.”

Along with reporters, Explorers Club members, and members of the public who had paid the $75 to $150 entry fee, several former NASA astronauts were in attendance to lend their endorsements, including the MC for the evening, Michael López-Alegría, veteran of the space shuttle and the ISS. Also on hand, highlighting the changing times with his very presence, was the world’s first second-generation astronaut, Richard Garriott. Garriott’s father flew missions on Skylab and the space shuttle in the 1970s and 1980s, respectively. However, Garriott paid his own way to the International Space Station in 2008 as a private citizen.

The evening was a whirlwind of activity, with customer testimonials and rapid-fire displays of rocket launches, spacecraft in orbit, and space ships under construction and being tested. It all painted a picture of an industry on the move, with multiple companies offering services from suborbital experiences and research opportunities to flights to Earth orbit and beyond.

The event also offered a glimpse at the plans of several key players.

Lauren De Niro Pipher, head of astronaut relations at Virgin Galactic, revealed that the company’s founder plans to fly with his family aboard the Virgin Galactic SpaceShipTwo rocket plane in November or December of this year. The flight will launch the company’s suborbital spaceflight business, for which De Niro Pipher said more than 700 customers have so far put down deposits on tickets costing $200,000 to $250,000.

The director of business development for Blue Origin, Bretton Alexander, announced his company’s intention to begin test flights of its first full-scale vehicle within the next year. “We have not publicly started selling rides in space as others have,” said Alexander during his question-and-answer session. “But that is our plan to do that, and we look forward to doing that, hopefully soon.”

Blue Origin is perhaps the most secretive of the commercial spaceflight companies, typically revealing little of its progress toward the services it plans to offer: suborbital manned spaceflight and, later, orbital flight. Like Virgin, it was founded by a wealthy entrepreneur, in this case Amazon founder Jeff Bezos. The company, which is headquartered in Kent, Washington, has so far conducted at least one supersonic test flight and a test of its escape rocket system, both at its West Texas test center.

Also on hand was the head of Planetary Resources, Chris Lewicki, a former spacecraft engineer and manager for Mars programs at NASA. He showed off a prototype of his company’s Arkyd 100, an asteroid-hunting space telescope the size of a toaster oven. If all goes according to plan, a fleet of Arkyd 100s will first scan the skies from Earth orbit in search of nearby asteroids that might be rich in mineral wealth and water, to be visited by the next generation of Arkyd probes. Water is potentially valuable for future space-based enterprises as rocket fuel (split into its constituent elements of hydrogen and oxygen) and for use in life support systems. Planetary Resources plans to “launch early, launch often,” Lewicki told me after his presentation. To that end, the company is building a series of CubeSat-size spacecraft dubbed Arkyd 3s, to be launched from the International Space Station by the end of this year.

Andrew Antonio, experience manager at a relatively new company, World View Enterprises, showed a computer-generated video of his company’s planned balloon flights to the edge of space. A manned capsule will ascend to 100,000 feet, or about 20 miles up, from which the curvature of Earth and the black sky of space are visible. At $75,000 per ticket (reduced to $65,000 for Explorers Club members), the flight will be more affordable than competing rocket-powered suborbital experiences but won’t go as high. Antonio said his company plans to launch a small test vehicle “in about a month.”

XCOR’s director of payload sales and operations, Khaki Rodway, showed video clips of the company’s Lynx suborbital rocket plane coming together in Mojave, California, as well as a profile of an XCOR spaceflight customer. Hangared just down the flight line at the same air and space port where Virgin Galactic’s SpaceShipTwo is undergoing flight testing, the Lynx offers seating for one paying customer per flight at $95,000. XCOR hopes the Lynx will begin flying by the end of this year.

Read the entire article here.

Image: Still from the Clangers TV show. Courtesy of BBC / Smallfilms.

A new mobile app lets you share all your intimate details with a stranger for 20 days. The fascinating part of this social experiment is that the stranger remains anonymous throughout. The app, known as 20 Day Stranger, is brought to us by the venerable MIT Media Lab. It may never catch on, but you can be sure that psychologists are gleefully awaiting some data.

From Slate:

Social media is all about connecting with people you know, people you sort of know, or people you want to know. But what about all those people you didn’t know you wanted to know? They’re out there, too, and the new iPhone app 20 Day Stranger wants to put you in touch with them. Created by the MIT Media Lab’s Playful Systems research group, the app connects strangers and allows them to update each other about any and every detail of their lives for 20 days. But the people are totally anonymous and can interact directly only at the end of their 20 days together, when they can exchange one message each.

20 Day Stranger uses information from the iPhone’s sensors to alert your stranger-friend when you wake up (and start moving the phone), when you’re in a car or bus (from GPS tracking), and where you are. But it isn’t totally privacy-invading: The app also takes steps to keep both people anonymous. When it shows your stranger-friend that you’re walking around somewhere, it accompanies the notification with images from a half-mile radius of where you actually are on Google Maps. Your stranger-friend might be able to figure out what area you’re in, or they might not.

Kevin Slavin, the director of Playful Systems, explained to Fast Company that the app’s goal is to introduce people online in a positive and empathetic way, rather than one that’s filled with suspicion or doubt. Though 20 Day Stranger is currently being beta tested, Playful Systems’ goal is to generally release it in the App Store. But the group is worried about getting people to adopt it all over instead of building up user bases in certain geographic areas. “There’s no one type of person that will make it useful,” Slavin said. “It’s the heterogeneous quality of everyone in aggregate. Which is a bad [promotional] strategy if you’re making commercial software.”

At this point it’s not that rare to interact frequently with someone you’ve never met in person on social media. What’s unusual is not to know their name or anything about who they are. But an honest window into another person’s life without the pressure of identity could expand your worldview and maybe even stimulate introspection. It sounds like a step up from Secret, that’s for sure.

Read the entire article here.

“I don’t see the point in measuring life in time any more… I would rather measure it in terms of what I actually achieve. I’d rather measure it in terms of making a difference, which I think is a much more valid and pragmatic measure.”

These are the inspiring and insightful words of 19-year-old Stephen Sutton, from Birmingham in Britain, about a week before he died from bowel cancer. His upbeat attitude and selflessness during his last days captured the hearts and minds of the nation, and he raised around $5½ million for cancer charities in the process.

From the Guardian:

Few scenarios can seem as cruel or as bleak as a 19-year-old boy dying of cancer. And yet, in the case of Stephen Sutton, who died peacefully in his sleep in the early hours of Wednesday morning, it became an inspiring, uplifting tale for millions of people.

Sutton was already something of a local hero in Birmingham, where he was being treated, but it was an extraordinary Facebook update in April that catapulted him into the national spotlight.

“It’s a final thumbs up from me,” he wrote, accompanied by a selfie of him lying in a sickbed, covered in drips, smiling cheerfully with his thumbs in the air. “I’ve done well to blag things as well as I have up till now, but unfortunately I think this is just one hurdle too far.”

It was an extraordinary moment: many would have forgiven him being full of rage and misery. And yet here was a simple, understated display of cheerful defiance.

Sutton had originally set a fundraising target of £10,000 for the Teenage Cancer Trust. But the emotional impact of that selfie was so profound that, in a matter of days, more than £3m was donated.

He made a temporary recovery that baffled doctors; he explained that he had “coughed up” a tumour. And so began an extraordinary dialogue with his well-wishers.

To his astonishment, nearly a million people liked his Facebook page and tens of thousands followed him on Twitter. It is fashionable to be downbeat about social media: to dismiss it as being riddled with the banal and the narcissistic, or for stripping human interaction of warmth as conversations shift away from the “real world” to the online sphere.

But it was difficult not to be moved by the online response to Stephen’s story: a national wave of emotion that is not normally forthcoming for those outside the world of celebrity.

His social-media updates were relentlessly upbeat, putting those of us who have tweeted moaning about a cold to shame. “Just another update to let everyone know I am still doing and feeling very well,” he reassured followers less than a week before his death. “My disease is very advanced and will get me eventually, but I will try my damn hardest to be here as long as possible.”

Sutton was diagnosed with bowel cancer in September 2010 when he was 15; tragically, he had been misdiagnosed and treated for constipation months earlier.

But his response was unabashed positivity from the very beginning, even describing his diagnosis as a “good thing” and a “kick up the backside”.

The day he began chemotherapy, he attended a party dressed as a granny – he was so thin and pale, he said, that he was “quite convincing”. He refused to take time off school, where he excelled.

When he was diagnosed as terminally ill two years later, he set up a Facebook page with a bucket list of things he wanted to achieve, including sky-diving, crowd-surfing in a rubber dinghy, and hugging an animal bigger than him (an elephant, it turned out).

But it was his fundraising for cancer research that became his passion, and his efforts will undoubtedly transform the lives of some of the 2,200 teenagers and young adults diagnosed with cancer each year.

The Teenage Cancer Trust on Wednesday said it was humbled and hugely grateful for his efforts, with donations still ticking up and reaching £3.34m by mid-afternoon.

His dream had been to become a doctor. With that ambition taken from him, he sought and found new ways to help people. “Spreading positivity” was another key aim. Four days ago, he organised a National Good Gestures Day, in Birmingham, giving out “free high-fives, hugs, handshakes and fist bumps”.

Indeed, it was not just money for cancer research that Sutton was after. He became an evangelist for a new approach to life.

“I don’t see the point in measuring life in time any more,” he told one crowd. “I would rather measure it in terms of what I actually achieve. I’d rather measure it in terms of making a difference, which I think is a much more valid and pragmatic measure.”

By such a measure, Sutton could scarcely have lived a longer, richer and more fulfilling life.

Read the entire story here.

Image: Stephen Sutton. Courtesy of Google Search.

Over the coming years the words “Thwaites Glacier” will become known to many people, especially those who make their home near the world’s oceans. The thawing of Antarctic ice and the accelerating melting of its glaciers, of which Thwaites is a prime example, pose an increasing threat to our coasts and, ultimately, imperil us all.

Thwaites is one of six mega-glaciers that drain into West Antarctica’s Amundsen Sea. If all six were to melt completely, and the melting continues, global sea level is projected to rise by an average of 4½ feet. Astonishingly, this catastrophe in the making has passed a tipping point: climatologists and glaciologists now tend to agree that the melting is irreversible and accelerating.

From ars technica:

Today, researchers at UC Irvine and the Jet Propulsion Laboratory have announced results indicating that glaciers across a large area of West Antarctica have been destabilized and that there is little that will stop their continuing retreat. These glaciers are all that stand between the ocean and a massive basin of ice that sits below sea level. Should the sea invade this basin, we’d be committed to several meters of sea level rise.

Even in the short term, the new findings should increase our estimates for sea level rise by the end of the century, the scientists suggest. But the ongoing process of retreat and destabilization will mean that the area will contribute to rising oceans for centuries.

The press conference announcing these results is ongoing. We will have a significant update on this story later today.

UPDATE (2:05pm CDT):

The glaciers in question are in West Antarctica, and drain into the Amundsen Sea. On the coastal side, the ends of the glacier are actually floating on ocean water. Closer to the coast, there’s what’s called a “grounding line,” where the weight of the ice above sea level pushes the bottom of the glacier down against the sea bed. From there on, back to the interior of Antarctica, all of the ice is directly in contact with the Earth.

That’s a rather significant fact, given that, just behind a range of coastal hills, all of the ice is sitting in a huge basin that’s significantly below sea level. In total, the basin contains enough ice to raise sea levels approximately four meters, largely because the ice piled in there rises significantly above sea level.

Because of this configuration, the grounding lines of the glaciers that drain this basin act as a protective barrier, keeping the sea back from the base of the deeper basin. Once ocean waters start infiltrating the base of a glacier, the glacier melts, flows faster, and thins. This lessens the weight holding the glacier down, ultimately causing it to float, which hastens its break up. Since the entire basin is below sea level (in some areas by over a kilometer), water entering the basin via any of the glaciers could destabilize the entire thing.

Thus, understanding the dynamics of the grounding lines is critical. Today’s announcements have been driven by two publications. One of them models the behavior of one of these glaciers, and shows that it has likely reached a point where it will be prone to a sudden retreat sometime in the next few centuries. The second examines every glacier draining this basin, and shows that all but one of them are currently losing contact with their grounding lines.

The data come from two decades’ worth of observations from the ESA’s European Remote Sensing satellites. These include radar that performs two key functions. It peers through the ice to get a sense of the terrain that lies buried under the ice near the grounding line. And, through interferometry, it tracks the dynamics of the ice sheet’s flow in the area, as well as its thinning and the location of the grounding line itself. The study tracks a number of glaciers that all drain into the region: Pine Island, Thwaites, Haynes, and Smith/Kohler.

As we’ve covered previously, the Pine Island Glacier came ungrounded in the second half of the past decade, retreating up to 31km in the process. Although this was the one that made headlines, all the glaciers in the area are in retreat. Thwaites saw areas retreat up to 14km over the course of the study, Haynes retracted by 10km, and the Smith/Kohler glaciers retreated by 35km.

The retreating was accompanied by thinning of the glaciers, as ice that had been held back above sea levels in the interior spread forward and thinned out. This contributed to sea level rise, and the speakers at the press conference agreed that the new data shows that the recently released IPCC estimates for sea level rise are out of date; even by the end of this century, the continuation of this process will significantly increase the rate of sea level rise we can expect.

The real problem, however, comes later. Glaciers can establish new grounding lines if there’s a feature in the terrain, such as a hill that rises above sea level, that provides a new anchoring point. The authors see none: “Upstream of the 2011 grounding line positions, we find no major bed obstacle that would prevent the glaciers from further retreat and draw down the entire basin.” In fact, several of the existing grounding lines are close to points where the terrain begins to slope downward into the basin.

For some of the glaciers, the problems are already starting. At Pine Island, the bottom of the glacier is now sitting on terrain that’s 400 meters deeper than where the end rested in 1992, and there are no major hills between there and the basin. As for the Smith/Kohler glaciers, the grounding line is 800 meters deeper and “its ice shelf pinning points are vanishing.”

As a result, the authors concluded that these glaciers are essentially destabilized—unless something changes radically, they’re destined for retreat into the indefinite future. But what will the trajectory of that retreat look like? In this case, the data doesn’t directly help. It needs to be fed into a model that projects the current melting into the future. Conveniently, a different set of scientists has already done this modeling.

The work focuses on the Thwaites glacier, which appears to be the most stable: there are 60-80km between the existing terminus and the deep basin, and two or three ridges within that distance that will allow the formation of new grounding lines.

The authors simulated the behavior of Thwaites using a number of different melting rates. These ranged from a low that approximated the behavior typical in the early 90s, to a high rate of melt that is similar to what was observed in recent years. Every single one of these situations saw the Thwaites retreat into the deep basin within the next 1,000 years. In the higher melt scenarios—the ones most reflective of current conditions—this typically took only a few centuries.

The other worrisome behavior is that there appeared to be a tipping point. In every simulation that saw an extensive retreat, rates of melting shifted from under 80 gigatonnes of ice per year to 150 gigatonnes or more, all within the span of a couple of decades. In the later conditions, this glacier alone contributed half a centimeter to sea level rise—every year.
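For a rough sense of scale, the melt rates quoted above can be turned into sea-level figures with a back-of-the-envelope calculation. The sketch below is not the researchers' model. It assumes an ocean surface area of about 361.8 million square kilometers, treats every gigatonne of lost ice as a cubic kilometer of meltwater spread evenly over that area, and ignores everything else (ice that is already floating, changes in ocean area, thermal expansion). The function names are invented for illustration.

```python
# Back-of-the-envelope conversion between ice loss and global sea level.
# Assumption: ocean surface area of roughly 3.618e8 km^2 (3.618e14 m^2).
OCEAN_AREA_M2 = 3.618e14
# Assumption: 1 gigatonne of ice melts into about 1e9 cubic meters of water.
M3_PER_GIGATONNE = 1.0e9


def sea_level_rise_mm(gigatonnes: float) -> float:
    """Millimeters of global sea level rise from melting this much ice."""
    volume_m3 = gigatonnes * M3_PER_GIGATONNE
    return volume_m3 / OCEAN_AREA_M2 * 1000.0


def gigatonnes_for_rise_mm(mm: float) -> float:
    """Gigatonnes of ice that must melt to raise sea level by `mm`."""
    return mm / 1000.0 * OCEAN_AREA_M2 / M3_PER_GIGATONNE


if __name__ == "__main__":
    for rate in (80, 150):
        print(f"{rate} Gt/year -> {sea_level_rise_mm(rate):.2f} mm/year")
    print(f"5 mm/year needs about {gigatonnes_for_rise_mm(5):.0f} Gt/year")
```

Under these simplifications, 150 gigatonnes a year works out to roughly 0.4 millimeters of sea level rise a year, so a contribution of half a centimeter a year would correspond to losses well beyond the 150-gigatonne threshold, on the order of 1,800 gigatonnes a year.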

Read the entire article here.

Image: Thwaites Glacier, Antarctica, 2012. Courtesy of NASA Earth Observatory.

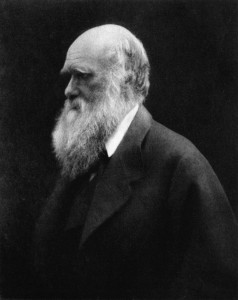

Researchers at Imperial College, London recently posed an intriguing question and have since developed a cool experiment to test it. Does artistic endeavor, such as music, follow the same principles of evolutionary selection in biology, as described by Darwin? That is, does the funkiest survive? Though, one has to wonder what the eminent scientist would have thought about some recent fusion of rap / dubstep / classical.

From the Guardian:

There were some funky beats at Imperial College London on Saturday at its annual science festival. As well as opportunities to create bogeys, see robots dance and try to get physics PhD students to explain their wacky world, this fascinating event included the chance to participate in a public game-like experiment called DarwinTunes.

Participants select tunes and “mate” them with other tunes to create musical offspring: if the offspring are in turn selected by other players, they “survive” and get the chance to reproduce their musical DNA. The experiment is online – you too can try to immortalise your selfish musical genes.
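The select-and-mate loop described above can be sketched in a few lines of Python. This is a toy illustration and not the DarwinTunes code: the eight-note "tunes", the `mate` and `evolve` functions, and above all `listener_score`, which stands in for the human players by pretending they prefer small steps between notes, are all assumptions made up for the example.

```python
import random

NOTES = list(range(8))   # hypothetical eight-note scale
TUNE_LENGTH = 8          # hypothetical tune length


def random_tune(rng):
    """A tune is just a list of note numbers."""
    return [rng.choice(NOTES) for _ in range(TUNE_LENGTH)]


def mate(a, b, rng, mutation_rate=0.05):
    """Splice two parent tunes at a random point, with occasional mutation."""
    cut = rng.randrange(1, TUNE_LENGTH)
    child = a[:cut] + b[cut:]
    return [rng.choice(NOTES) if rng.random() < mutation_rate else note
            for note in child]


def listener_score(tune):
    """Stand-in for a human rating: this imaginary listener likes small steps."""
    return -sum(abs(x - y) for x, y in zip(tune, tune[1:]))


def evolve(generations=50, population_size=20, seed=0):
    """Keep the best-rated half each round and refill with their offspring."""
    rng = random.Random(seed)
    population = [random_tune(rng) for _ in range(population_size)]
    for _ in range(generations):
        population.sort(key=listener_score, reverse=True)
        survivors = population[: population_size // 2]
        children = []
        while len(survivors) + len(children) < population_size:
            mum, dad = rng.sample(survivors, 2)
            children.append(mate(mum, dad, rng))
        population = survivors + children
    return max(population, key=listener_score)


if __name__ == "__main__":
    best = evolve()
    print(best, listener_score(best))
```

Because the best-rated half always survives into the next round, the top score can never go down from one generation to the next. In the real experiment the ratings come from people, who are far less consistent than a one-line scoring function.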

It is a model of evolution in practice that raises fascinating questions about culture and nature. These questions apply to all the arts, not just to dance beats. How does “cultural evolution” work? How close is the analogy between Darwin’s well-proven theory of evolution in nature and the evolution of art, literature and music?

The idea of cultural evolution was boldly defined by Jacob Bronowski as our fundamental human ability “not to accept the environment but to change it”. The moment the first stone tools appeared in Africa, about 2.5m years ago, a new, faster evolution, that of human culture, became visible on Earth: from cave paintings to the Renaissance, from Galileo to the 3D printer, this cultural evolution has advanced at breathtaking speed compared with the massive periods of time it takes nature to evolve new forms.

In DarwinTunes, cultural evolution is modelled as what the experimenters call “the survival of the funkiest”. Pulsing dance beats evolve through selections made by participants, and the music (it is claimed) becomes richer through this process of selection. Yet how does the model really correspond to the story of culture?

One way Darwin’s laws of nature apply to visual art is in the need for every successful form to adapt to its environment. In the forests of west and central Africa, wood carving was until recent times a flourishing art form. In the islands of Greece, where marble could be quarried easily, stone sculpture was more popular. In the modern technological world, the things that easily come to hand are not wood or stone but manufactured products and media images – so artists are inclined to work with the readymade.

At first sight, the thesis of DarwinTunes is a bit crude. Surely it is obvious that artists don’t just obey the selections made by their audience – that is, their consumers. To think they do is to apply the economic laws of our own consumer society across all history. Culture is a lot funkier than that.

Yet just because the laws of evolution need some adjustment to encompass art, that does not mean art is a mysterious spiritual realm impervious to scientific study. In fact, the evolution of evolution – the adjustments made by researchers to Darwin’s theory since it was unveiled in the Victorian age – offers interesting ways to understand culture.